Model Reproduction: ReXNet

Date: 2025-07-29 | Author: 游乐小编

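This article reproduces ReXNet, a new backbone with a small parameter count and high accuracy. It summarizes the design principles the paper proposes to remove representational bottlenecks in deep networks. It then walks through the building blocks (ConvBN, Swish, SE, etc.), the main network structure and the preset model variants. Finally, it validates the model on the ImageNet validation set and reports the accuracy obtained.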

Introduction

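- With some free time on my hands, I'm back to reproducing models

- This time the target is another freshly released model: ReXNet

- Overall it looks like a very promising new backbone: a fairly small parameter count, yet quite high accuracy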

Model Introduction

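- Related resources:

- Introductory article (Chinese): 【ReXNet|消除表达瓶颈,提升性能指标】 ("ReXNet: eliminating representational bottlenecks to improve performance")

- Paper: 【ReXNet: Diminishing Representational Bottleneck on Convolutional Neural Network】

- Latest code: 【clovaai/rexnet】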

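- Paper abstract:

- The paper targets the representational bottleneck in deep networks and proposes a set of design principles for improving model performance.

- The authors argue that the conventional network design paradigm creates representational bottlenecks, which in turn hurt model performance.

- To study the bottleneck, the authors examined the matrix rank of features produced by tens of thousands of randomly generated networks. They also studied how channels are configured across a network's layers.

- Based on these findings, the authors propose a set of simple yet effective design principles that remove the representational bottleneck.

- Applying these principles to a baseline network with only minor adjustments yields significant performance gains on ImageNet.

- Experiments on COCO object detection and transfer learning further confirm the approach: removing representational bottlenecks helps improve model performance.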
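The rank argument can be illustrated with a toy example in pure Python. This is not the paper's experiment. The sizes, the random seed and the `matrix_rank` helper are assumptions made for this sketch.

A linear layer that expands 4 input channels to 16 output channels cannot produce features of rank above 4. Applying an elementwise nonlinearity such as Swish to the same outputs can raise the rank.

```python
import math
import random


def matrix_rank(rows, tol=1e-8):
    """Numerical rank via Gaussian elimination with partial pivoting (illustrative helper)."""
    m = [list(r) for r in rows]
    n_rows, n_cols = len(m), len(m[0])
    rank = 0
    for col in range(n_cols):
        # choose the remaining row with the largest magnitude in this column
        pivot = max(range(rank, n_rows), key=lambda r: abs(m[r][col]), default=None)
        if pivot is None or abs(m[pivot][col]) <= tol:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, n_rows):
            factor = m[r][col] / m[rank][col]
            for c in range(col, n_cols):
                m[r][c] -= factor * m[rank][c]
        rank += 1
        if rank == n_rows:
            break
    return rank


def swish(v):
    # Swish(x) = x * sigmoid(x) = x / (1 + exp(-x))
    return v / (1.0 + math.exp(-v))


# Toy setup (assumed sizes): 32 samples, 4 input channels expanded to 16 output channels
random.seed(0)
n_samples, d_in, d_out = 32, 4, 16
X = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(n_samples)]
W = [[random.gauss(0, 1) for _ in range(d_out)] for _ in range(d_in)]
Z = [[sum(x[k] * W[k][j] for k in range(d_in)) for j in range(d_out)] for x in X]

rank_linear = matrix_rank(Z)                                   # bounded by d_in
rank_swish = matrix_rank([[swish(v) for v in row] for row in Z])
print("rank after linear expansion:", rank_linear)
print("rank after Swish:", rank_swish)
```

In this toy case, the linear expansion alone adds channels without adding rank. The nonlinearity is what breaks the bound.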

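Quick Start

- The 【Paddle-Image-Models】 project is recommended for quickly loading this model

- For detailed usage, see: 【【Paddle-Image-Models】飞桨预训练图像模型库】 (PaddlePaddle pre-trained image model library)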

Building the Model

Import the required packages

In [1]

import paddle
import paddle.nn as nn
from math import ceil

Basic modules

- Includes the ConvBN, Swish and SE modules

In [2]

def ConvBN(out, in_channels, channels, kernel=1, stride=1, pad=0, num_group=1, act=None):
    # Append Conv2D (no bias) + BatchNorm2D, optionally followed by an activation, to the list `out`
    out.append(nn.Conv2D(in_channels, channels, kernel, stride, pad, groups=num_group, bias_attr=False))
    out.append(nn.BatchNorm2D(channels))
    if act == 'swish':
        out.append(Swish())
    elif act == 'relu':
        out.append(nn.ReLU())
    elif act == 'relu6':
        out.append(nn.ReLU6())


class Swish(nn.Layer):
    # Swish activation: x * sigmoid(x)
    def __init__(self):
        super(Swish, self).__init__()

    def forward(self, x):
        return x * nn.functional.sigmoid(x)


class SE(nn.Layer):
    # Squeeze-and-Excitation: global average pool -> 1x1 conv bottleneck -> per-channel sigmoid gate
    def __init__(self, in_channels, channels, se_ratio=12):
        super(SE, self).__init__()
        self.avg_pool = nn.AdaptiveAvgPool2D(1)
        self.fc = nn.Sequential(
            nn.Conv2D(in_channels, channels // se_ratio, kernel_size=1, padding=0),
            nn.BatchNorm2D(channels // se_ratio),
            nn.ReLU(),
            nn.Conv2D(channels // se_ratio, channels, kernel_size=1, padding=0),
            nn.Sigmoid()
        )

    def forward(self, x):
        y = self.avg_pool(x)
        y = self.fc(y)
        return x * y
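The Swish and SE modules can be sketched without Paddle to show what they compute. This is a simplified, pure-Python version on a nested-list `[C][H][W]` feature map:

- BatchNorm and conv biases are omitted, so it is not numerically equivalent to the `SE` layer above.
- The weights `w1` and `w2` are arbitrary illustrative values.
- In the Paddle module, the reduced width is `channels // se_ratio`.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def swish(v):
    # Swish(x) = x * sigmoid(x), the same formula as the Swish layer
    return v * sigmoid(v)


def se_block(feature_map, w1, w2):
    """Simplified SE forward pass (no BatchNorm, no biases).

    feature_map: [C][H][W] nested lists
    w1: [C_reduced][C] weights of the first 1x1 conv (squeeze to C_reduced channels)
    w2: [C][C_reduced] weights of the second 1x1 conv (expand back to C channels)
    """
    # Squeeze: global average pooling, one number per channel
    squeezed = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    # Excitation: reduce -> ReLU -> expand -> sigmoid
    hidden = [max(0.0, sum(w * s for w, s in zip(w_row, squeezed))) for w_row in w1]
    gates = [sigmoid(sum(w * h for w, h in zip(w_row, hidden))) for w_row in w2]
    # Scale: multiply every spatial position of channel c by gates[c]
    scaled = [[[v * g for v in row] for row in ch] for ch, g in zip(feature_map, gates)]
    return scaled, gates


# Illustrative input: 2 channels of size 2x2, reduced to 1 channel inside the SE block
fm = [[[1.0, 3.0], [1.0, 3.0]],   # channel 0, mean 2.0
      [[0.0, 0.0], [0.0, 0.0]]]   # channel 1, mean 0.0
w1 = [[0.5, 0.5]]                 # 2 -> 1 channels (hypothetical weights)
w2 = [[1.0], [-1.0]]              # 1 -> 2 channels (hypothetical weights)
out, gates = se_block(fm, w1, w2)
print(gates)
```

The gates lie in (0, 1), so SE can only rescale channels, never flip their sign. Swish behaves like the identity for large positive inputs and goes to 0 for large negative ones.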

LinearBottleneck

In [3]

class LinearBottleneck(nn.Layer):
    def __init__(self, in_channels, channels, t, stride, use_se=True, se_ratio=12):
        super(LinearBottleneck, self).__init__()
        # Shortcut only when the spatial size is kept and the channel count does not shrink
        self.use_shortcut = stride == 1 and in_channels <= channels
        self.in_channels = in_channels
        self.out_channels = channels

        out = []
        if t != 1:
            # 1x1 expansion conv (expansion ratio t) + Swish
            dw_channels = in_channels * t
            ConvBN(out, in_channels=in_channels, channels=dw_channels, act='swish')
        else:
            dw_channels = in_channels

        # 3x3 depthwise conv
        ConvBN(out, in_channels=dw_channels, channels=dw_channels, kernel=3, stride=stride, pad=1, num_group=dw_channels)

        if use_se:
            out.append(SE(dw_channels, dw_channels, se_ratio))

        out.append(nn.ReLU6())
        # 1x1 linear projection (no activation)
        ConvBN(out, in_channels=dw_channels, channels=channels)
        self.out = nn.Sequential(*out)

    def forward(self, x):
        out = self.out(x)
        if self.use_shortcut:
            # Partial residual: add the input to the first in_channels output channels
            out[:, 0:self.in_channels] += x
        return out
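Because each block is allowed to have more output channels than input channels, the shortcut in `forward` adds the input only to the first `in_channels` output channels. The remaining channels pass through unchanged.

Below is a minimal pure-Python sketch of that partial residual, with each channel reduced to a single number for readability. The helper names are hypothetical.

```python
def use_shortcut(in_channels, out_channels, stride):
    # Same condition as LinearBottleneck: spatial size kept and channels do not shrink
    return stride == 1 and in_channels <= out_channels


def partial_residual(block_out, block_in):
    """Add block_in (C_in values) to the first C_in entries of block_out (C_out >= C_in).

    Each list element stands for one whole channel in this simplified sketch.
    """
    c_in = len(block_in)
    assert len(block_out) >= c_in, "output must have at least as many channels as the input"
    return [o + i for o, i in zip(block_out[:c_in], block_in)] + block_out[c_in:]


print(partial_residual([1, 1, 1, 1, 1], [10, 20, 30]))  # first 3 channels get the residual
print(use_shortcut(16, 27, 1), use_shortcut(27, 38, 2))
```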

ReXNet

- The main model body

In [4]

class ReXNetV1(nn.Layer):
    def __init__(self, input_ch=16, final_ch=180, width_mult=1.0, depth_mult=1.0, class_dim=1000,
                 use_se=True, se_ratio=12, dropout_ratio=0.2):
        super(ReXNetV1, self).__init__()

        layers = [1, 2, 2, 3, 3, 5]
        strides = [1, 2, 2, 2, 1, 2]
        use_ses = [False, False, True, True, True, True]

        layers = [ceil(element * depth_mult) for element in layers]
        strides = sum([[element] + [1] * (layers[idx] - 1) for idx, element in enumerate(strides)], [])
        if use_se:
            use_ses = sum([[element] * layers[idx] for idx, element in enumerate(use_ses)], [])
        else:
            use_ses = [False] * sum(layers[:])
        ts = [1] * layers[0] + [6] * sum(layers[1:])

        self.depth = sum(layers[:]) * 3
        stem_channel = 32 / width_mult if width_mult < 1.0 else 32
        inplanes = input_ch / width_mult if width_mult < 1.0 else input_ch

        features = []
        in_channels_group = []
        channels_group = []

        # The following channel configuration is a simple instance to make each layer become an expand layer.
        for i in range(self.depth // 3):
            if i == 0:
                in_channels_group.append(int(round(stem_channel * width_mult)))
                channels_group.append(int(round(inplanes * width_mult)))
            else:
                in_channels_group.append(int(round(inplanes * width_mult)))
                inplanes += final_ch / (self.depth // 3 * 1.0)
                channels_group.append(int(round(inplanes * width_mult)))

        ConvBN(features, 3, int(round(stem_channel * width_mult)), kernel=3, stride=2, pad=1, act='swish')

        for block_idx, (in_c, c, t, s, se) in enumerate(zip(in_channels_group, channels_group, ts, strides, use_ses)):
            features.append(LinearBottleneck(in_channels=in_c, channels=c, t=t, stride=s, use_se=se, se_ratio=se_ratio))

        pen_channels = int(1280 * width_mult)
        ConvBN(features, c, pen_channels, act='swish')

        features.append(nn.AdaptiveAvgPool2D(1))
        self.features = nn.Sequential(*features)
        self.output = nn.Sequential(
            nn.Dropout(dropout_ratio),
            nn.Conv2D(pen_channels, class_dim, 1))

    def forward(self, x):
        x = self.features(x)
        # Flatten from axis 1 instead of squeeze(), so a batch of size 1 keeps shape [1, class_dim]
        x = paddle.flatten(self.output(x), start_axis=1)
        return x
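The channel configuration in `__init__` is the concrete form of the paper's "make every layer an expand layer" principle. Output channels grow by a constant step of `final_ch / n_blocks` per block, so no block reduces its width.

The sketch below recomputes the per-block schedule with the same arithmetic as `ReXNetV1.__init__`, in pure Python so it can be inspected without building the network. The function name `rexnet_schedule` is hypothetical.

```python
from math import ceil


def rexnet_schedule(input_ch=16, final_ch=180, width_mult=1.0, depth_mult=1.0):
    """Return (in_channels, out_channels, expansion t, stride) per block, mirroring ReXNetV1."""
    layers = [ceil(n * depth_mult) for n in [1, 2, 2, 3, 3, 5]]
    # The first block of each stage carries the stage stride, the rest use stride 1
    strides = sum([[s] + [1] * (layers[i] - 1) for i, s in enumerate([1, 2, 2, 2, 1, 2])], [])
    ts = [1] * layers[0] + [6] * sum(layers[1:])
    n_blocks = sum(layers)

    stem_channel = 32 / width_mult if width_mult < 1.0 else 32
    inplanes = input_ch / width_mult if width_mult < 1.0 else input_ch
    in_chs, out_chs = [], []
    for i in range(n_blocks):
        if i == 0:
            in_chs.append(int(round(stem_channel * width_mult)))
            out_chs.append(int(round(inplanes * width_mult)))
        else:
            in_chs.append(int(round(inplanes * width_mult)))
            # Linear channel growth: a constant increment per block
            inplanes += final_ch / (n_blocks * 1.0)
            out_chs.append(int(round(inplanes * width_mult)))
    return list(zip(in_chs, out_chs, ts, strides))


for block in rexnet_schedule():
    print(block)
```

For the 1.0x model this gives 16 blocks whose output widths run from 16 up to 185 channels. Four of the blocks use stride 2, which together with the stride-2 stem gives the usual 32x downsampling.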

Preset models

In [5]

def rexnet_100(pretrained=False, **kwargs):
    model = ReXNetV1(width_mult=1.0, **kwargs)
    return model


def rexnet_130(pretrained=False, **kwargs):
    model = ReXNetV1(width_mult=1.3, **kwargs)
    return model


def rexnet_150(pretrained=False, **kwargs):
    model = ReXNetV1(width_mult=1.5, **kwargs)
    return model


def rexnet_200(pretrained=False, **kwargs):
    model = ReXNetV1(width_mult=2.0, **kwargs)
    return model


def rexnet_300(pretrained=False, **kwargs):
    model = ReXNetV1(width_mult=3.0, **kwargs)
    return model
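The presets differ only in `width_mult`, which scales three widths:

- the stem (`round(32 * width_mult)`);
- the last bottleneck (the final value of the linear channel schedule times `width_mult`);
- the penultimate 1x1 conv (`int(1280 * width_mult)`).

The sketch below computes these in closed form. It assumes `width_mult >= 1.0`, which holds for all five presets. The `PRESETS` dict and `width_summary` are hypothetical names.

```python
# Width multipliers of the preset constructors
PRESETS = {'rexnet_100': 1.0, 'rexnet_130': 1.3, 'rexnet_150': 1.5, 'rexnet_200': 2.0, 'rexnet_300': 3.0}


def width_summary(width_mult, input_ch=16, final_ch=180, n_blocks=16):
    """(stem, last block, penultimate) channel counts, assuming width_mult >= 1.0."""
    stem = int(round(32 * width_mult))
    # After n_blocks - 1 increments of final_ch / n_blocks, starting from input_ch
    last_block = int(round((input_ch + (n_blocks - 1) * final_ch / n_blocks) * width_mult))
    penultimate = int(1280 * width_mult)
    return stem, last_block, penultimate


for name, w in PRESETS.items():
    print(name, width_summary(w))
```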

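Model validation

- Load the converted, latest pretrained weights and evaluate the model on the ImageNet 1k validation set

- Check whether the accuracy matches the latest reported figures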
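Matching the reported accuracy depends on using the same evaluation preprocessing. The evaluation cell in this section resizes the shorter image side to 256 px, keeping the aspect ratio, and then center-crops 224 x 224. That keeps a 224/256 = 0.875 fraction of the resized image along each axis.

The sketch below computes that geometry for a given image size. The exact integer truncation and rounding are assumptions and may differ by a pixel from `paddle.vision.transforms`.

```python
def resize_then_center_crop(width, height, resize_to=256, crop=224):
    """Resize the shorter side to `resize_to` (aspect ratio kept), then center-crop `crop` x `crop`.

    Returns the resized (w, h) and the crop box (left, top, right, bottom) in resized coordinates.
    Truncation/rounding choices here are assumptions, not Paddle's exact implementation.
    """
    if width <= height:
        new_w, new_h = resize_to, int(resize_to * height / width)
    else:
        new_w, new_h = int(resize_to * width / height), resize_to
    left = (new_w - crop) // 2
    top = (new_h - crop) // 2
    return (new_w, new_h), (left, top, left + crop, top + crop)


print(resize_then_center_crop(500, 375))  # a typical landscape ImageNet image size
```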

Extract the dataset

In [ ]

!mkdir ~/data/ILSVRC2012
!tar -xf ~/data/data68594/ILSVRC2012_img_val.tar -C ~/data/ILSVRC2012
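If shell magics (`!`) are not available, the same two steps can be done from Python with the standard-library `tarfile` module. This is a minimal sketch: the helper name `extract_tar` is hypothetical, and the commented call simply reuses the paths from the cell above.

```python
import os
import tarfile


def extract_tar(tar_path, dest_dir):
    """Python equivalent of `mkdir dest_dir` followed by `tar -xf tar_path -C dest_dir`."""
    os.makedirs(dest_dir, exist_ok=True)   # tar -C requires the target directory to exist
    with tarfile.open(tar_path) as tar:
        tar.extractall(dest_dir)
    return sorted(os.listdir(dest_dir))


# Example call with the notebook's paths (not executed here):
# extract_tar(os.path.expanduser('~/data/data68594/ILSVRC2012_img_val.tar'),
#             os.path.expanduser('~/data/ILSVRC2012'))
```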

Build the dataset

- Build a dataset class for the ILSVRC2012 (ImageNet 1k) validation set
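The dataset class in the next cell reads a label list in which each line holds a relative image path and an integer class index, separated by a space.

Below is a slightly more defensive parser for that format. It skips blank lines, tolerates a missing trailing newline, and splits on the last whitespace so a path containing spaces stays intact. The function name and the sample lines are illustrative assumptions, not taken from the actual `val_list.txt`.

```python
import os


def parse_label_list(lines, root):
    """Parse '<relative image path> <class index>' lines into (paths, labels)."""
    paths, labels = [], []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        img, label = line.rsplit(maxsplit=1)
        paths.append(os.path.join(root, img))
        labels.append(int(label))
    return paths, labels


# Hypothetical sample lines in the assumed format
sample = ['ILSVRC2012_val_00000001.JPEG 65\n', '\n', 'ILSVRC2012_val_00000002.JPEG 970']
print(parse_label_list(sample, 'data/ILSVRC2012'))
```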

In [6]

import os
import paddle
import numpy as np
from PIL import Image


class ILSVRC2012(paddle.io.Dataset):
    def __init__(self, root, label_list, transform):
        self.transform = transform
        self.root = root
        self.label_list = label_list
        self.load_datas()

    def load_datas(self):
        self.imgs = []
        self.labels = []
        with open(self.label_list, 'r') as f:
            for line in f:
                # Each line: "<relative image path> <class index>"; strip() also handles a missing final newline
                img, label = line.strip().split(' ')
                self.imgs.append(os.path.join(self.root, img))
                self.labels.append(int(label))

    def __getitem__(self, idx):
        label = self.labels[idx]
        image = self.imgs[idx]
        image = Image.open(image).convert('RGB')
        image = self.transform(image)
        return image.astype('float32'), np.array(label).astype('int64')

    def __len__(self):
        return len(self.imgs)

Model validation

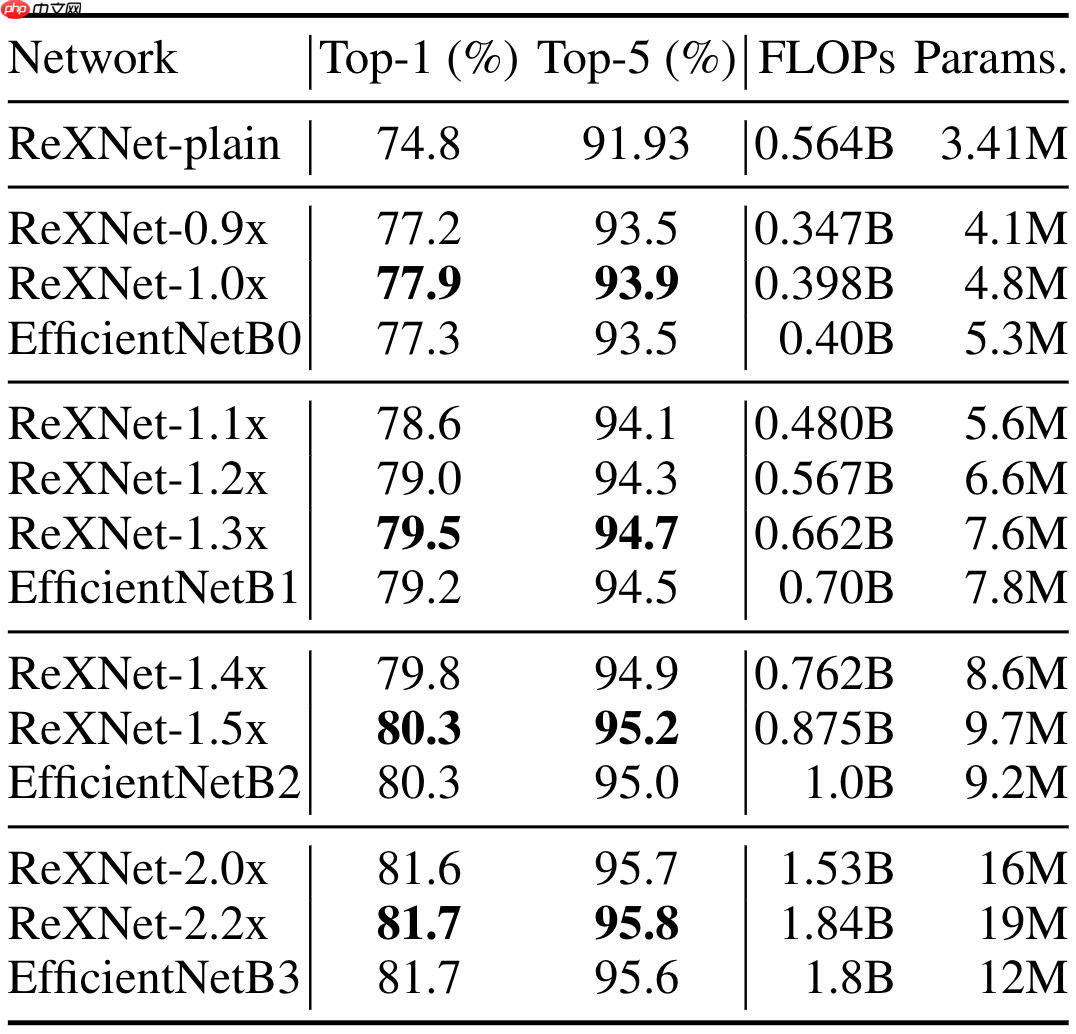

- ReXNet 的最新标称精度如下:

In [7]

import paddle.vision.transforms as T

model = rexnet_100()
model.set_dict(paddle.load('data/data75438/rexnetv1_1.0x.pdparams'))
model = paddle.Model(model)
model.prepare(metrics=paddle.metric.Accuracy(topk=(1, 5)))

val_transforms = T.Compose([
    T.Resize(256, interpolation='bicubic'),
    T.CenterCrop(224),
    T.ToTensor(),
    T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])
])
val_dataset = ILSVRC2012('data/ILSVRC2012', transform=val_transforms, label_list='data/data68594/val_list.txt')

model.evaluate(val_dataset, batch_size=512)

Eval begin...
The loss value printed in the log is the current batch, and the metric is the average value of previous step.


step 10/98 - acc_top1: 0.7811 - acc_top5: 0.9396 - 7s/step
step 20/98 - acc_top1: 0.7836 - acc_top5: 0.9396 - 7s/step
step 30/98 - acc_top1: 0.7813 - acc_top5: 0.9392 - 7s/step
step 40/98 - acc_top1: 0.7793 - acc_top5: 0.9383 - 7s/step
step 50/98 - acc_top1: 0.7792 - acc_top5: 0.9389 - 7s/step
step 60/98 - acc_top1: 0.7797 - acc_top5: 0.9386 - 7s/step
step 70/98 - acc_top1: 0.7785 - acc_top5: 0.9386 - 7s/step
step 80/98 - acc_top1: 0.7789 - acc_top5: 0.9385 - 7s/step
step 90/98 - acc_top1: 0.7786 - acc_top5: 0.9385 - 7s/step
step 98/98 - acc_top1: 0.7786 - acc_top5: 0.9387 - 6s/step
Eval samples: 50000

{'acc_top1': 0.77862, 'acc_top5': 0.93868}
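`acc_top1` and `acc_top5` are top-k accuracies. A sample counts as correct if its true label is among the k highest-scoring classes.

The pure-Python sketch below illustrates that definition. It is not Paddle's `paddle.metric.Accuracy` implementation, and the toy scores are made up. Over the 50,000 validation images, the final `acc_top1` of 0.77862 corresponds to 38,931 correctly classified images, and `acc_top5` of 0.93868 to 46,934.

```python
def topk_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        hits += label in top_k
    return hits / len(labels)


# Toy example: 3 samples, 3 classes (made-up scores)
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 0]
print(topk_accuracy(scores, labels, 1), topk_accuracy(scores, labels, 2))

# Converting the reported accuracies on 50,000 images back to counts
print(round(0.77862 * 50000), round(0.93868 * 50000))
```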
