PointPillars: A Point-Cloud-Based Network for Fast 3D Object Detection

Date: 2025-07-18  Author: 游乐小编


PointPillars: A Fast Point-Cloud-Based Object Detection Network (Training Version)

1. Project Overview

① Introduction to PointPillars

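PointPillars is a fast point-cloud-based 3D object detection network that runs inference at 62 Hz on a machine with an Intel i7 CPU and a GTX 1080 Ti GPU. It is frequently used in autonomous driving and is a production-proven, widely deployed fast 3D object detector.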

A notable production use of PointPillars is the detector in the lidar module of Apollo 6.0.

② Results

The network performs fast 3D object detection on point cloud frames.

2. Network Overview

① Network Architecture

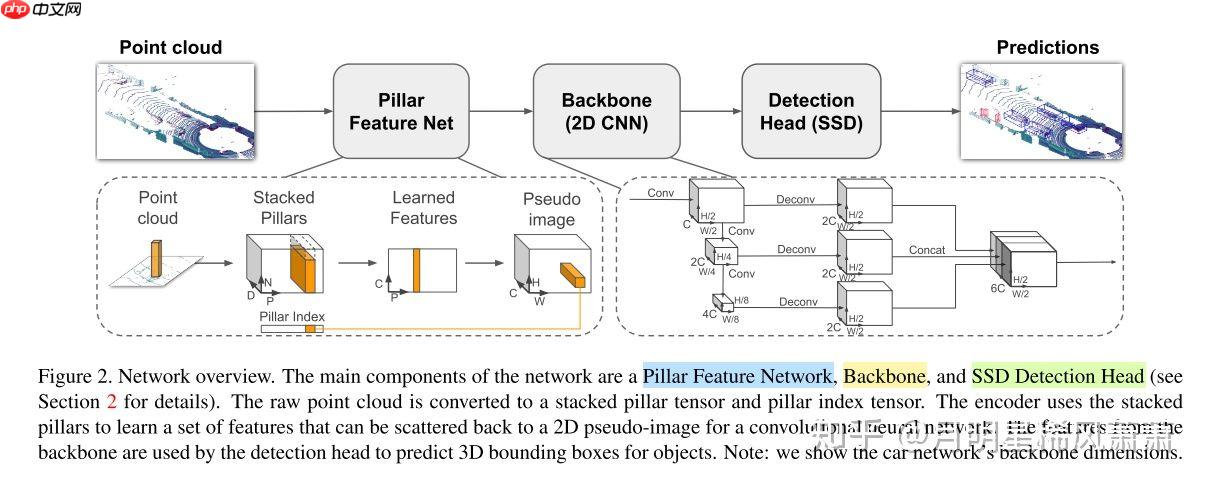

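The PointPillars architecture consists of three main parts: (1) a pillar encoder that turns the point cloud into a pseudo-image; (2) a 2D convolutional backbone that processes this pseudo-image; (3) an SSD detection head that detects the objects.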

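(1) Pillar encoding of the point cloud: the points are projected onto the bird's-eye-view (x-y) plane, which is divided into an H × W grid; each grid cell, together with all points above and below it, forms a pillar. Every point in a pillar is described by 9 features (x, y, z, r, x_c, y_c, z_c, x_p, y_p), where r is the reflectance, x_c, y_c, z_c are the point's offsets from the arithmetic mean of all points in the pillar, and x_p, y_p are its offsets from the pillar's x-y center. Because most pillars are empty, only non-empty pillars are kept, up to P per sample, and each pillar holds exactly N points: pillars with more than N points are randomly sampled and those with fewer are zero-padded. This yields a dense tensor of shape (9, P, N). A simplified PointNet (linear layer, BatchNorm, ReLU) maps it to (C, P, N), a max over the N points reduces it to (C, P), and the pillar features are scattered back to their grid positions to form a (C, H, W) pseudo-image.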
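The encoding step can be sketched in NumPy as below. This is a minimal, non-learned illustration, not the PAPC implementation: the function names `encode_pillars` and `scatter_to_pseudo_image`, the toy point-cloud range, and the limits P and N are hypothetical, and a plain max over the raw 9 features stands in for the learned PointNet layer.

```python
import numpy as np


def encode_pillars(points, pc_range, pillar_size, max_pillars, max_points, seed=0):
    """Group points into pillars and build the (9, P, N) tensor.

    points: (M, 4) array of x, y, z, r.
    pc_range: (x_min, y_min, z_min, x_max, y_max, z_max); pillar_size: (dx, dy).
    Returns features (9, P, N), grid coords (P, 2) as (row, col),
    the number of used pillars, and the grid size (H, W).
    """
    rng = np.random.default_rng(seed)
    x_min, y_min, z_min, x_max, y_max, z_max = pc_range
    dx, dy = pillar_size
    W = int(round((x_max - x_min) / dx))
    H = int(round((y_max - y_min) / dy))

    # Drop points outside the detection range.
    inside = ((points[:, 0] >= x_min) & (points[:, 0] < x_max) &
              (points[:, 1] >= y_min) & (points[:, 1] < y_max) &
              (points[:, 2] >= z_min) & (points[:, 2] < z_max))
    pts = points[inside]

    # Flat grid index of the pillar each point falls into.
    col = np.clip(((pts[:, 0] - x_min) // dx).astype(np.int64), 0, W - 1)
    row = np.clip(((pts[:, 1] - y_min) // dy).astype(np.int64), 0, H - 1)
    flat = row * W + col

    cells = np.unique(flat)  # non-empty pillars only
    if len(cells) > max_pillars:  # too many pillars: keep a random subset
        cells = rng.choice(cells, max_pillars, replace=False)

    feats = np.zeros((9, max_pillars, max_points), dtype=np.float32)
    coords = np.zeros((max_pillars, 2), dtype=np.int64)
    for p, cell in enumerate(cells):
        members = pts[flat == cell]
        if len(members) > max_points:  # too many points: keep a random subset
            members = members[rng.choice(len(members), max_points, replace=False)]
        n = len(members)
        r, c = divmod(int(cell), W)
        center_x = x_min + (c + 0.5) * dx
        center_y = y_min + (r + 0.5) * dy
        f = np.empty((n, 9), dtype=np.float32)
        f[:, :4] = members[:, :4]                                 # x, y, z, r
        f[:, 4:7] = members[:, :3] - members[:, :3].mean(axis=0)  # x_c, y_c, z_c
        f[:, 7] = members[:, 0] - center_x                        # x_p
        f[:, 8] = members[:, 1] - center_y                        # y_p
        feats[:, p, :n] = f.T  # remaining point slots stay zero (padding)
        coords[p] = (r, c)
    return feats, coords, len(cells), (H, W)


def scatter_to_pseudo_image(pillar_feats, coords, num_pillars, grid_hw):
    """Place per-pillar vectors (C, P) back onto the BEV grid, giving (C, H, W)."""
    H, W = grid_hw
    canvas = np.zeros((pillar_feats.shape[0], H, W), dtype=pillar_feats.dtype)
    rows, cols = coords[:num_pillars, 0], coords[:num_pillars, 1]
    canvas[:, rows, cols] = pillar_feats[:, :num_pillars]
    return canvas


pts = np.array([[0.05, 0.05, 0.0, 1.0],
                [0.10, 0.02, 0.0, 0.5],
                [1.00, 1.00, 0.0, 0.2],
                [5.00, 5.00, 0.0, 0.0]])  # the last point lies outside the range
feats, coords, num, grid = encode_pillars(pts, (0, 0, -3, 1.6, 1.6, 1), (0.16, 0.16),
                                          max_pillars=4, max_points=3)
pooled = feats.max(axis=2)  # stand-in only for the learned PointNet + max over points
image = scatter_to_pseudo_image(pooled, coords, num, grid)
print(feats.shape, num, image.shape)  # (9, 4, 3) 2 (9, 10, 10)
```

In the real network the max is taken over learned, ReLU-activated features, so the zero padding never dominates; the raw-feature max here only demonstrates the shapes.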

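(2) 2D convolutional backbone: this part serves as the network's backbone and consists of two sub-networks. The first is a top-down network built from convolution, BatchNorm, and ReLU layers; it produces features at progressively smaller spatial resolutions to capture information at different scales. The second sub-network upsamples the output of each top-down block with transposed convolutions (deconvolutions) and concatenates the results, fusing the multi-scale features into a single feature map.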
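The PointPillars paper specifies the top-down blocks as Block(S, 4, C), Block(2S, 6, 2C), Block(4S, 6, 4C) and the upsampling steps as Up(S, S, 2C), Up(2S, S, 2C), Up(4S, S, 2C), with C = 64 and a first-block stride S = 2 for cars. The sketch below only traces tensor shapes through that layout to show why the three upsampled maps can be concatenated; the function name and the 496 × 432 example grid are assumptions, and the layer settings in the PAPC config may differ.

```python
def trace_backbone(H, W, C=64, S=2):
    """Trace (channels, height, width) through the PointPillars backbone layout.

    H, W: pseudo-image size; C: pillar feature channels; S: stride of the first block.
    """
    # Block(stride relative to the pseudo-image, number of conv layers, output channels)
    blocks = [(S, 4, C), (2 * S, 6, 2 * C), (4 * S, 6, 4 * C)]
    # Up(input stride, output stride, output channels), one per block
    ups = [(S, S, 2 * C), (2 * S, S, 2 * C), (4 * S, S, 2 * C)]

    top_down = []
    for stride, _num_convs, channels in blocks:
        assert H % stride == 0 and W % stride == 0, "grid must divide evenly"
        top_down.append((channels, H // stride, W // stride))

    upsampled = []
    for (s_in, s_out, channels), (_, h, w) in zip(ups, top_down):
        factor = s_in // s_out  # stride of the transposed convolution
        upsampled.append((channels, h * factor, w * factor))

    # All upsampled maps share one resolution, so they concatenate along channels.
    assert len({shape[1:] for shape in upsampled}) == 1
    fused = (sum(shape[0] for shape in upsampled),) + upsampled[0][1:]
    return top_down, upsampled, fused


top_down, upsampled, fused = trace_backbone(496, 432)
print(top_down)  # [(64, 248, 216), (128, 124, 108), (256, 62, 54)]
print(fused)     # (384, 248, 216), i.e. 6C channels at stride S
```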

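(3) Detection head: an SSD-style detection head predicts object classes and 3D boxes from the fused feature map. Anchors are matched to ground-truth boxes using 2D IoU in the bird's-eye view; box height and elevation are not used for matching but are regressed as additional targets.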
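A simplified sketch of anchor assignment by BEV IoU, in NumPy. Assumptions: boxes are reduced to axis-aligned BEV rectangles (rotation is ignored), the 0.6 / 0.45 thresholds are the car values reported in the PointPillars paper, and forcing each ground-truth box to keep its best-matching anchor is a common SSD-style rule, not something this article states. The function names are hypothetical.

```python
import numpy as np


def bev_iou(anchors, gts):
    """Pairwise IoU of axis-aligned BEV boxes given as (x_min, y_min, x_max, y_max)."""
    ix1 = np.maximum(anchors[:, None, 0], gts[None, :, 0])
    iy1 = np.maximum(anchors[:, None, 1], gts[None, :, 1])
    ix2 = np.minimum(anchors[:, None, 2], gts[None, :, 2])
    iy2 = np.minimum(anchors[:, None, 3], gts[None, :, 3])
    inter = np.clip(ix2 - ix1, 0, None) * np.clip(iy2 - iy1, 0, None)
    area_a = (anchors[:, 2] - anchors[:, 0]) * (anchors[:, 3] - anchors[:, 1])
    area_g = (gts[:, 2] - gts[:, 0]) * (gts[:, 3] - gts[:, 1])
    return inter / (area_a[:, None] + area_g[None, :] - inter)


def assign_anchors(anchors, gts, pos_thr=0.6, neg_thr=0.45):
    """Label anchors 1 (positive), 0 (negative) or -1 (ignored); needs at least one gt box."""
    iou = bev_iou(anchors, gts)          # (A, G)
    matched_gt = iou.argmax(axis=1)      # best gt for each anchor
    best_iou = iou.max(axis=1)
    labels = np.full(len(anchors), -1, dtype=np.int64)
    labels[best_iou < neg_thr] = 0
    labels[best_iou >= pos_thr] = 1
    # SSD-style rule (assumption): every gt keeps its single best anchor as a positive.
    best_anchor = iou.argmax(axis=0)
    labels[best_anchor] = 1
    matched_gt[best_anchor] = np.arange(len(gts))
    return labels, matched_gt
```

Anchors that fall between the two thresholds are ignored, so they contribute to neither the classification nor the regression loss.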

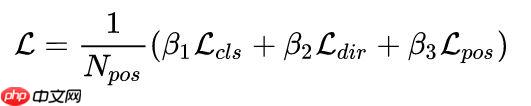

② Loss Function

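A localization loss (smooth L1 on the box residuals relative to the anchor) regresses the 3D bounding box. Because the heading residual is encoded as the sine of the angle difference, the localization loss cannot distinguish a box from the same box flipped by ±π, so a separate direction loss, a softmax classification over discretized headings, learns the heading direction. The classification branch is trained with focal loss.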
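The loss terms can be sketched as follows, using the formulation from the PointPillars paper: box residuals relative to the anchor with Δθ = sin(θ_gt - θ_a), smooth L1 for localization, focal loss with α = 0.25 and γ = 2 for classification, and a weighted sum normalized by the number of positive anchors (β_loc = 2, β_cls = 1, β_dir = 0.2). The two-bin direction label, the smooth L1 `beta`, and all function names are illustrative assumptions; the PAPC code may implement these details differently.

```python
import numpy as np


def encode_box(gt, anchor):
    """Regression targets for boxes given as (x, y, z, w, l, h, theta)."""
    xg, yg, zg, wg, lg, hg, tg = gt
    xa, ya, za, wa, la, ha, ta = anchor
    da = np.sqrt(wa ** 2 + la ** 2)  # diagonal of the anchor's BEV footprint
    return np.array([(xg - xa) / da, (yg - ya) / da, (zg - za) / ha,
                     np.log(wg / wa), np.log(lg / la), np.log(hg / ha),
                     np.sin(tg - ta)])


def smooth_l1(x, beta=1.0):
    ax = np.abs(x)
    return np.where(ax < beta, 0.5 * ax ** 2 / beta, ax - 0.5 * beta)


def focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-7):
    """p: predicted foreground probability; y: 1 for positive anchors, 0 for negative."""
    p = np.clip(p, eps, 1.0 - eps)
    p_t = np.where(y == 1, p, 1.0 - p)
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)
    return -alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)


def direction_label(theta):
    """Two-bin heading class (assumption): 1 if the wrapped heading is positive, else 0."""
    wrapped = (theta + np.pi) % (2 * np.pi) - np.pi
    return (wrapped > 0).astype(np.int64)


def total_loss(l_loc, l_cls, l_dir, num_pos, b_loc=2.0, b_cls=1.0, b_dir=0.2):
    return (b_loc * l_loc + b_cls * l_cls + b_dir * l_dir) / max(num_pos, 1)


theta = 0.3
print(smooth_l1(np.sin((theta + np.pi) - theta)))        # close to 0: the flip goes unpenalized
print(direction_label(np.array([theta, theta + np.pi])))  # [1 0]: the direction bins differ
```

The first print shows the ambiguity described above: a prediction rotated by π gives a near-zero sine residual, and only the direction classifier separates the two headings.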

3. Network Training

① Dataset Preparation

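PointPillars is trained on the 3D object detection benchmark of the KITTI dataset, which contains 7,481 training images and 7,518 test images with their corresponding point clouds, 80,256 labeled objects in total. Evaluation is done both in the regular 3D view and in the bird's-eye view.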

!rm -rf kitti/
!mkdir -p kitti/training/velodyne_reduced
!mkdir -p kitti/testing/velodyne_reduced

!unzip data/data50186/data_object_calib.zip -d kitti/

!unzip data/data50186/image_training.zip -d kitti/training/
!unzip data/data50186/data_object_label_2.zip -d kitti/training/
!unzip data/data50186/velodyne_training_1.zip -d kitti/training/
!unzip data/data50186/velodyne_training_2.zip -d kitti/training/
!unzip data/data50186/velodyne_training_3.zip -d kitti/training/

!unzip data/data50186/image_testing.zip -d kitti/testing/
!unzip data/data50186/velodyne_testing_1.zip -d kitti/testing/
!unzip data/data50186/velodyne_testing_2.zip -d kitti/testing/
!unzip data/data50186/velodyne_testing_3.zip -d kitti/testing/

!mv kitti/training/training/* kitti/training/
!rm -rf kitti/training/training/
!mv kitti/testing/testing/* kitti/testing/
!rm -rf kitti/testing/testing/

!mkdir kitti/training/velodyne
!mv kitti/training/velodyne_training_1/* kitti/training/velodyne/
!mv kitti/training/velodyne_training_2/* kitti/training/velodyne/
!mv kitti/training/velodyne_training_3/* kitti/training/velodyne/
!rm -rf kitti/training/velodyne_training_1
!rm -rf kitti/training/velodyne_training_2
!rm -rf kitti/training/velodyne_training_3
!mkdir kitti/testing/velodyne
!mv kitti/testing/velodyne_testing_1/* kitti/testing/velodyne/
!mv kitti/testing/velodyne_testing_2/* kitti/testing/velodyne/
!mv kitti/testing/velodyne_testing_3/* kitti/testing/velodyne/
!rm -rf kitti/testing/velodyne_testing_1
!rm -rf kitti/testing/velodyne_testing_2
!rm -rf kitti/testing/velodyne_testing_3
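After these commands the data should follow the usual KITTI object layout. The helper below is a sketch: its folder list is an assumption based on the standard KITTI release (calib, image_2, label_2, velodyne) plus the velodyne_reduced folders created above, and the function names are hypothetical. It counts the files in each folder and loads one Velodyne scan, which KITTI stores as a flat array of little-endian float32 values, four per point (x, y, z, reflectance).

```python
from pathlib import Path

import numpy as np

# Sub-folders expected after the unpacking commands (assumed standard KITTI layout).
# velodyne_reduced stays empty until create_reduced_point_cloud has run.
EXPECTED = {
    "training": ["calib", "image_2", "label_2", "velodyne", "velodyne_reduced"],
    "testing": ["calib", "image_2", "velodyne", "velodyne_reduced"],
}


def check_kitti_layout(root="kitti"):
    """Return {"split/sub": file count, or None if the folder is missing}."""
    root = Path(root)
    report = {}
    for split, subdirs in EXPECTED.items():
        for sub in subdirs:
            folder = root / split / sub
            report[f"{split}/{sub}"] = (
                sum(1 for _ in folder.iterdir()) if folder.is_dir() else None)
    return report


def load_velodyne_bin(path):
    """Read one KITTI Velodyne scan as an (N, 4) array of x, y, z, reflectance."""
    return np.fromfile(str(path), dtype="<f4").reshape(-1, 4)


if __name__ == "__main__":
    for name, count in check_kitti_layout().items():
        print(f"{name:28s} {count}")
```

With the full dataset unpacked, training/velodyne should report 7481 files and testing/velodyne 7518, matching the split sizes given above.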

② Install the Required Libraries

!pip install shapely pybind11 protobuf scikit-image numba pillow fire

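③ Dataset Processing and Preparation

Preprocess the KITTI dataset: create the dataset info files, create the reduced point clouds (only the points inside the camera field of view, written to velodyne_reduced), and create the ground-truth database used for data augmentation.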

%cd pointpillars/

!python create_data.py create_kitti_info_file --data_path=kitti

!python create_data.py create_reduced_point_cloud --data_path=kitti

!python create_data.py create_groundtruth_database --data_path=kitti
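In second.pytorch, whose create_data.py subcommands these match, create_reduced_point_cloud keeps only the lidar points that fall inside the front camera's field of view, since KITTI labels only objects visible in the image. A minimal NumPy version of that filter is sketched below. It assumes the usual KITTI calibration chain (pixel = P2 · R0_rect · Tr_velo_to_cam · lidar point) with the matrices already parsed from the calib file; the function name is hypothetical and this is not the repository's code.

```python
import numpy as np


def crop_to_camera_fov(points, P2, R0_rect, Tr_velo_to_cam, img_hw):
    """Keep lidar points that lie in front of the camera and project inside the image.

    points: (N, 4) x, y, z, r in the lidar frame; P2: (3, 4); R0_rect: (3, 3);
    Tr_velo_to_cam: (3, 4); img_hw: (height, width) of the image in pixels.
    """
    n = points.shape[0]
    xyz1 = np.hstack([points[:, :3], np.ones((n, 1))])  # homogeneous lidar coords

    # Lift R0_rect and Tr_velo_to_cam to 4x4 so the chain can be multiplied.
    R0 = np.eye(4)
    R0[:3, :3] = R0_rect
    Tr = np.eye(4)
    Tr[:3, :4] = Tr_velo_to_cam
    cam = xyz1 @ (R0 @ Tr).T  # (N, 4) rectified camera coords
    pix = cam @ P2.T          # (N, 3) homogeneous pixel coords

    depth = pix[:, 2]
    in_front = depth > 0
    safe_depth = np.where(in_front, depth, 1.0)  # avoid dividing by zero or negatives
    u, v = pix[:, 0] / safe_depth, pix[:, 1] / safe_depth
    h, w = img_hw
    keep = in_front & (u >= 0) & (u < w) & (v >= 0) & (v < h)
    return points[keep]
```

Cropping this way typically discards most of each 360° scan, which shrinks the data the pillar encoder has to process.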

④ Training

!rm -rf ./params/model
!python train.py train --cfg_file=./params/configs/pointpillars_kitti_car_xy16.yaml --model_dir=./params/model
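The `xy16` in the config name presumably refers to a 0.16 m pillar size in x and y (an assumption; check the yaml). The pillar grid, and with it the pseudo-image size, follows directly from the point-cloud range and the pillar size. The range used below, x in [0, 69.12] m and y in [-39.68, 39.68] m, is the one commonly used for KITTI cars in second.pytorch's PointPillars configs and is likewise an assumption here; the function name is hypothetical.

```python
def pillar_grid(pc_range, pillar_size):
    """BEV grid size (H, W): rows along y, columns along x."""
    x_min, y_min, _z_min, x_max, y_max, _z_max = pc_range
    dx, dy = pillar_size
    return round((y_max - y_min) / dy), round((x_max - x_min) / dx)


# Assumed KITTI car range (x_min, y_min, z_min, x_max, y_max, z_max) in metres.
CAR_RANGE = (0.0, -39.68, -3.0, 69.12, 39.68, 1.0)
print(pillar_grid(CAR_RANGE, (0.16, 0.16)))  # (496, 432)
```

Halving the pillar size would quadruple the number of grid cells, so the pillar size is a direct trade-off between spatial resolution and speed.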

step=2, steptime=4.51, cls_loss=4.61e+02, cls_loss_rt=3.25e+02, loc_loss=23.5, loc_loss_rt=25.9, rpn_acc=0.253, prec@10=0.00382, rec@10=0.978, prec@30=0.0038, rec@30=0.919, prec@50=0.00402, rec@50=0.776, prec@70=0.00446, rec@70=0.491, prec@80=0.0052, rec@80=0.375, prec@90=0.00599, rec@90=0.232, prec@95=0.00588, rec@95=0.132, loss.loc_elem=[1.65, 1.5, 1.39, 1.61, 1.78, 1.59, 3.41], loss.cls_pos_rt=2.57e+02, loss.cls_neg_rt=68.0, loss.dir_rt=1.71, num_vox=11987, num_pos=87, num_neg=16460, num_anchors=16680, lr=0.0002, image_idx=Tensor(shape=[1], dtype=int64, place=CUDAPlace(0), stop_gradient=True, [2156])

step=4, steptime=5.91, cls_loss=3.5e+02, cls_loss_rt=2.16e+02, loc_loss=23.9, loc_loss_rt=23.3, rpn_acc=0.339, prec@10=0.00442, rec@10=0.958, prec@30=0.00439, rec@30=0.872, prec@50=0.00457, rec@50=0.669, prec@70=0.00504, rec@70=0.356, prec@80=0.00547, rec@80=0.241, prec@90=0.00625, rec@90=0.141, prec@95=0.00663, rec@95=0.0843, loss.loc_elem=[1.31, 1.61, 1.22, 1.38, 1.49, 1.43, 3.2], loss.cls_pos_rt=1.67e+02, loss.cls_neg_rt=48.6, loss.dir_rt=1.4, num_vox=12134, num_pos=104, num_neg=28902, num_anchors=29156, lr=0.0002, image_idx=Tensor(shape=[1], dtype=int64, place=CUDAPlace(0), stop_gradient=True, [2865])

step=6, steptime=4.38, cls_loss=2.91e+02, cls_loss_rt=1.88e+02, loc_loss=23.2, loc_loss_rt=20.4, rpn_acc=0.416, prec@10=0.00414, rec@10=0.951, prec@30=0.00405, rec@30=0.84, prec@50=0.00437, rec@50=0.602, prec@70=0.00533, rec@70=0.303, prec@80=0.00576, rec@80=0.194, prec@90=0.00664, rec@90=0.109, prec@95=0.00687, rec@95=0.0621, loss.loc_elem=[1.26, 1.02, 1.2, 1.2, 1.27, 1.15, 3.09], loss.cls_pos_rt=1.44e+02, loss.cls_neg_rt=43.6, loss.dir_rt=1.15, num_vox=14429, num_pos=95, num_neg=26713, num_anchors=26951, lr=0.0002, image_idx=Tensor(shape=[1], dtype=int64, place=CUDAPlace(0), stop_gradient=True, [7461])

step=8, steptime=4.34, cls_loss=2.41e+02, cls_loss_rt=86.1, loc_loss=21.7, loc_loss_rt=18.3, rpn_acc=0.505, prec@10=0.00402, rec@10=0.951, prec@30=0.00393, rec@30=0.818, prec@50=0.00439, rec@50=0.527, prec@70=0.00527, rec@70=0.242, prec@80=0.00567, rec@80=0.15, prec@90=0.00651, rec@90=0.0817, prec@95=0.00678, rec@95=0.046, loss.loc_elem=[0.927, 1.13, 1.04, 1.11, 1.09, 1.11, 2.73], loss.cls_pos_rt=64.0, loss.cls_neg_rt=22.1, loss.dir_rt=1.61, num_vox=10764, num_pos=96, num_neg=27143, num_anchors=27391, lr=0.0002, image_idx=Tensor(shape=[1], dtype=int64, place=CUDAPlace(0), stop_gradient=True, [6295])

step=10, steptime=6.53, cls_loss=2.07e+02, cls_loss_rt=68.9, loc_loss=20.1, loc_loss_rt=11.7, rpn_acc=0.597, prec@10=0.00373, rec@10=0.948, prec@30=0.00372, rec@30=0.785, prec@50=0.00438, rec@50=0.459, prec@70=0.0053, rec@70=0.204, prec@80=0.0056, rec@80=0.123, prec@90=0.00642, rec@90=0.0664, prec@95=0.00672, rec@95=0.0374, loss.loc_elem=[0.542, 0.715, 0.656, 0.708, 0.654, 0.682, 1.91], loss.cls_pos_rt=50.4, loss.cls_neg_rt=18.6, loss.dir_rt=0.901, num_vox=13760, num_pos=73, num_neg=37547, num_anchors=37763, lr=0.0002, image_idx=Tensor(shape=[1], dtype=int64, place=CUDAPlace(0), stop_gradient=True, [4677])

step=12, steptime=4.59, cls_loss=1.8e+02, cls_loss_rt=43.2, loc_loss=19.3, loc_loss_rt=16.1, rpn_acc=0.668, prec@10=0.00353, rec@10=0.947, prec@30=0.00356, rec@30=0.731, prec@50=0.00442, rec@50=0.404, prec@70=0.00536, rec@70=0.177, prec@80=0.00562, rec@80=0.105, prec@90=0.00643, rec@90=0.0562, prec@95=0.0067, rec@95=0.0314, loss.loc_elem=[0.94, 1.0, 0.978, 0.927, 0.933, 0.843, 2.44], loss.cls_pos_rt=30.7, loss.cls_neg_rt=12.6, loss.dir_rt=1.17, num_vox=15485, num_pos=104, num_neg=26403, num_anchors=26661, lr=0.0002, image_idx=Tensor(shape=[1], dtype=int64, place=CUDAPlace(0), stop_gradient=True, [724])

step=14, steptime=4.47, cls_loss=1.6e+02, cls_loss_rt=32.9, loc_loss=18.6, loc_loss_rt=14.5, rpn_acc=0.71, prec@10=0.00351, rec@10=0.934, prec@30=0.00355, rec@30=0.669, prec@50=0.00444, rec@50=0.354, prec@70=0.00537, rec@70=0.153, prec@80=0.00565, rec@80=0.0913, prec@90=0.00647, rec@90=0.0489, prec@95=0.00678, rec@95=0.0275, loss.loc_elem=[1.03, 0.846, 0.812, 0.898, 0.903, 0.875, 1.89], loss.cls_pos_rt=21.6, loss.cls_neg_rt=11.3, loss.dir_rt=0.877, num_vox=13753, num_pos=93, num_neg=37505, num_anchors=37749, lr=0.0002, image_idx=Tensor(shape=[1], dtype=int64, place=CUDAPlace(0), stop_gradient=True, [906])
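When comparing runs it helps to pull the scalar metrics out of these log lines, for example to watch cls_loss and loc_loss fall over the first steps. Below is a small regex-based parser, assuming the `key=value, ` format shown in the training log above; the function name is hypothetical. List values such as loss.loc_elem and the Tensor printed for image_idx are skipped.

```python
import re

# key=value pairs whose value is a plain number (optionally in e-notation).
_NUMERIC_PAIR = re.compile(r"([\w.@]+)=(-?\d+(?:\.\d*)?(?:[eE][+-]?\d+)?)(?=[,\s]|$)")


def parse_log_line(line):
    """Turn one training-log line into {metric name: float}."""
    return {key: float(value) for key, value in _NUMERIC_PAIR.findall(line)}


line = ("step=2, steptime=4.51, cls_loss=4.61e+02, loc_loss=23.5, "
        "prec@10=0.00382, loss.loc_elem=[1.65, 1.5], num_pos=87")
stats = parse_log_line(line)
print(stats["step"], stats["cls_loss"], stats["num_pos"])  # 2.0 461.0 87.0
```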

⑤ Closing Remarks

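Since this was written in a hurry, there may still be unfixed bugs. Feel free to try it out and send us feedback. The inference version will follow later (the code is already in the repository but has not been fixed yet).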

GitHub repository of our project: AgentMaker/PAPC

4. References

Paper: PointPillars: Fast Encoders for Object Detection from Point Clouds (Lang et al., CVPR 2019)

nutonomy/second.pytorch

mmlab/mmdetection3d

SmallMunich/nutonomy_pointpillars

hova88/Lidardet
