一文搞懂Paddle2.0中的优化器

时间:2025-07-23 作者:游乐小编

本项目围绕深度学习优化器展开,通过复现理解其原理。使用蜜蜂黄蜂分类数据集(含4类共7939张图),测试了Paddle2.0自带的多种优化器,对比性能,前5优为momentum、adamax、lamb等,还自定义了QHM优化器,为优化器选择提供参考。

项目简介

对于深度学习中的优化器我们向来采用的是“拿来主义”,即只要拿来然后根据API的参数来修改使用就可以了,或者在“炼丹”时根据经验尝试不同的优化器来给模型“涨点”。古语说得好,“纸上得来终觉浅,绝知此事要躬行”,要想真正的理解优化器-深度学习中的一个调参核心,最好的办法就是通过复现掌握优化器算法的原理。在本项目中我们将从三个不同方面来理解优化器:

学会Paddle2.0中自带的优化器API的使用方法。分析不同优化器的性能优劣。尝试通过自定义优化器来复现目前Paddle中没有的优化器算法。那么什么是优化器呢?

我们知道,深度学习的目标是找到可以满足我们任务的函数,而要找到这个函数就需要确定这个函数的参数。为了达到这个目的我们需要首先设计 一个损失函数,然后让我们的数据输入到待确定参数的备选函数中时这个损失函数可以有最小值。所以,我们就要不断的调整备选函数的参数值,这个调整的过程就是所谓的梯度下降。而如何调整的算法就是优化器算法。由此可见,优化器是深度学习中非常重要的一个概念。值得庆幸的是,深度学习框架Paddle提供了很多可以拿来就用的优化器函数。本项目就来研究一下这些Paddle中的优化器函数。对于想要自力更生设计优化器函数的同学,本项目也提供了一个自定义的优化器函数供参考交流。

项目中用到的数据集

本项目使用的数据集是蜜蜂黄蜂分类数据集。包含4个类别:蜜蜂、黄蜂、其它昆虫和其它类别。 共7939张图片,其中蜜蜂3183张,黄蜂4943张,其它昆虫2439张,其它类别856张

解压缩数据集

In [ ]!unzip -q data/data65386/beesAndwasps.zip -d work/dataset登录后复制

查看图片

In [101]import osimport randomfrom matplotlib import pyplot as pltfrom PIL import Imageimgs = []paths = os.listdir('work/dataset')for path in paths: img_path = os.path.join('work/dataset', path) if os.path.isdir(img_path): img_paths = os.listdir(img_path) img = Image.open(os.path.join(img_path, random.choice(img_paths))) imgs.append((img, path))f, ax = plt.subplots(2, 3, figsize=(12,12))for i, img in enumerate(imgs[:]): ax[i//3, i%3].imshow(img[0]) ax[i//3, i%3].axis('off') ax[i//3, i%3].set_title('label: %s' % img[1])plt.show()plt.show()登录后复制 登录后复制

数据预处理

In [ ]!python code/preprocess.py登录后复制

finished data preprocessing登录后复制

Paddle2.0中自带的优化器

使用SGD优化器

使用方法为:class paddle.optimizer.SGD(learning_rate=0.001, parameters=None, weight_decay=None, grad_clip=None, name=None)

该接口实现随机梯度下降算法,为网络添加反向计算过程,并根据反向计算所得的梯度,更新parameters中的Parameters,最小化网络损失值loss。

该优化器可以说是最简单的优化器算法了。

更新公式如下:

In [ ]

In [ ]## 开始训练!python code/train.py --optim 'sgd'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0123 23:32:08.546725 7181 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0123 23:32:08.552091 7181 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.6625945317044794 at epoch 0best acc is 0.7766143106457243 at epoch 1best acc is 0.852239674229203 at epoch 2best acc is 0.8702734147760326 at epoch 9登录后复制

可视化结果

图1 SGD训练验证图

In [ ]## 查看测试集上的效果!python code/test.py --optim 'sgd'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 00:10:28.273605 10381 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 00:10:28.279044 10381 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 0.6073 - acc: 0.8656 - 29ms/step Eval samples: 1763test accuracy is 0.8655700510493477登录后复制

使用Momentum优化器

使用方法为:paddle.optimizer.Momentum(learning_rate=0.001, momentum=0.9, parameters=None, use_nesterov=False, weight_decay=None, grad_clip=None, name=None)

该接口实现含有速度状态的Simple Momentum 优化器

该优化器含有牛顿动量标志:

更新公式如下:

In [ ]

In [ ]## 开始训练!python code/train.py --optim 'momentum'登录后复制

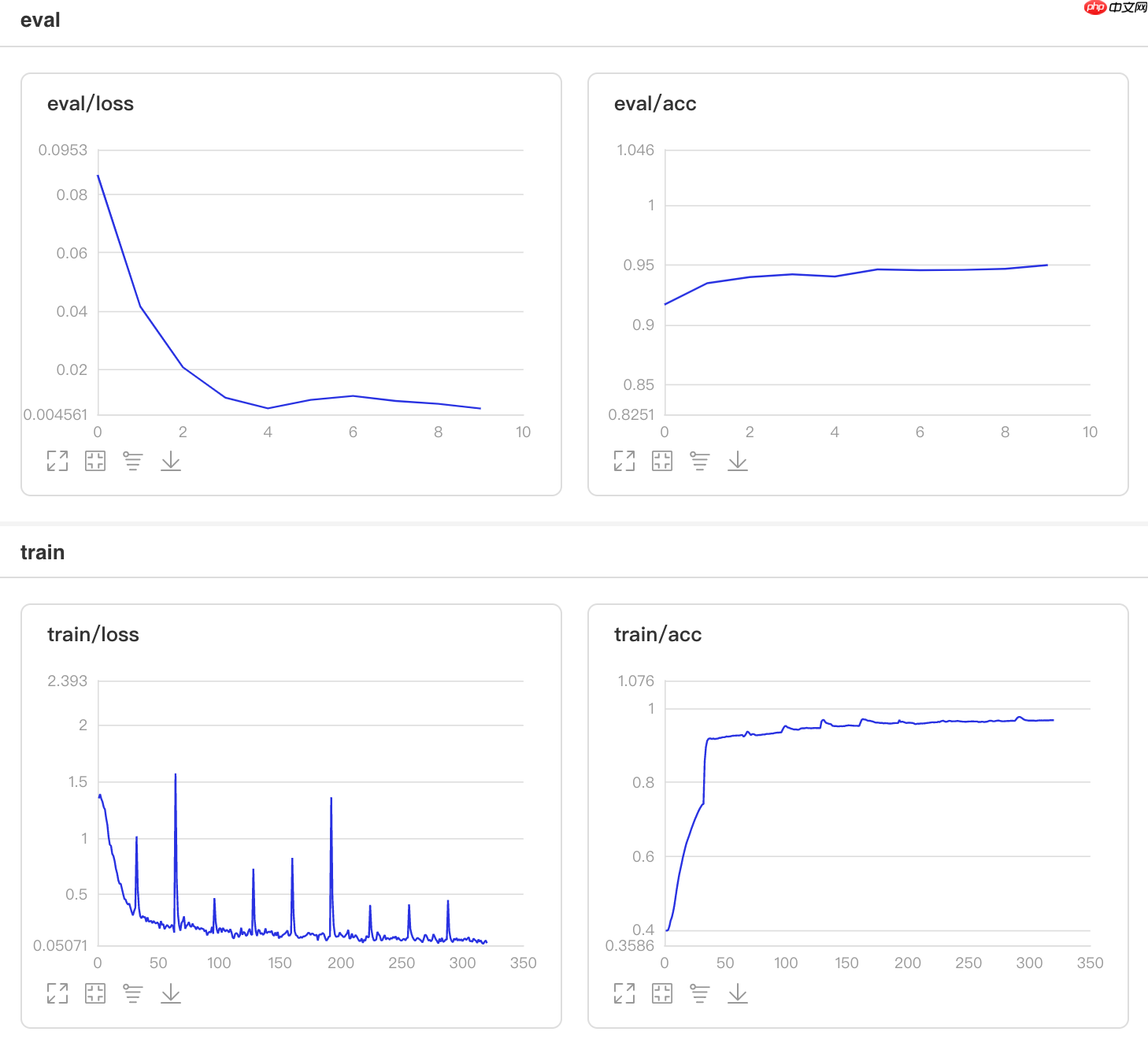

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 00:14:04.627827 10669 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 00:14:04.633363 10669 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.9168121000581734 at epoch 0best acc is 0.9400814426992438 at epoch 1best acc is 0.941826643397324 at epoch 2best acc is 0.9429901105293775 at epoch 3best acc is 0.9488074461896452 at epoch 5best acc is 0.9511343804537522 at epoch 9登录后复制

可视化结果

图2 Momentum训练验证图

In [ ]## 查看测试集上的效果!python work/code.py --optim 'momentum'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 00:45:03.963804 12825 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 00:45:03.969125 12825 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 0.0095 - acc: 0.9552 - 29ms/step Eval samples: 1763test accuracy is 0.9551900170164492登录后复制

使用RMSProp优化器

使用方法为:paddle.optimizer.RMSProp(learning_rate, rho=0.95, epsilon=1e-06, momentum=0.0, centered=False, parameters=None, weight_decay=None, grad_clip=None, name=None)

该接口实现均方根传播(RMSProp)法,是一种未发表的,自适应学习率的方法。

原演示幻灯片中提出了RMSProp:[https://www.cs.toronto.edu/~tijmen/csc321/slides/lecture_slides_lec6.pdf]中的第29张。

In [ ]## 开始训练!python code/train.py --optim 'rmsprop'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 00:47:18.013767 13118 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 00:47:18.019198 13118 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.5770796974985457 at epoch 3登录后复制

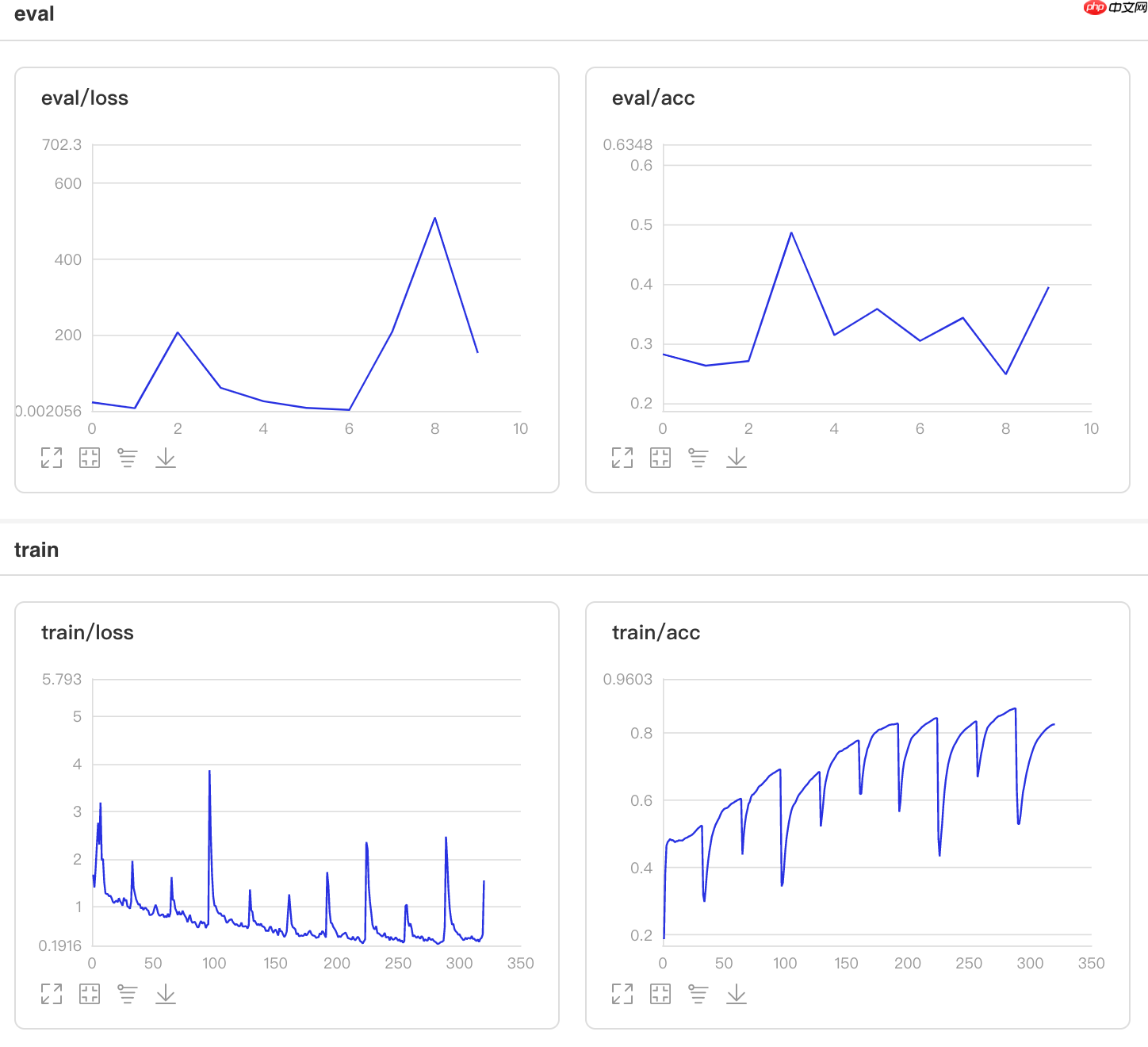

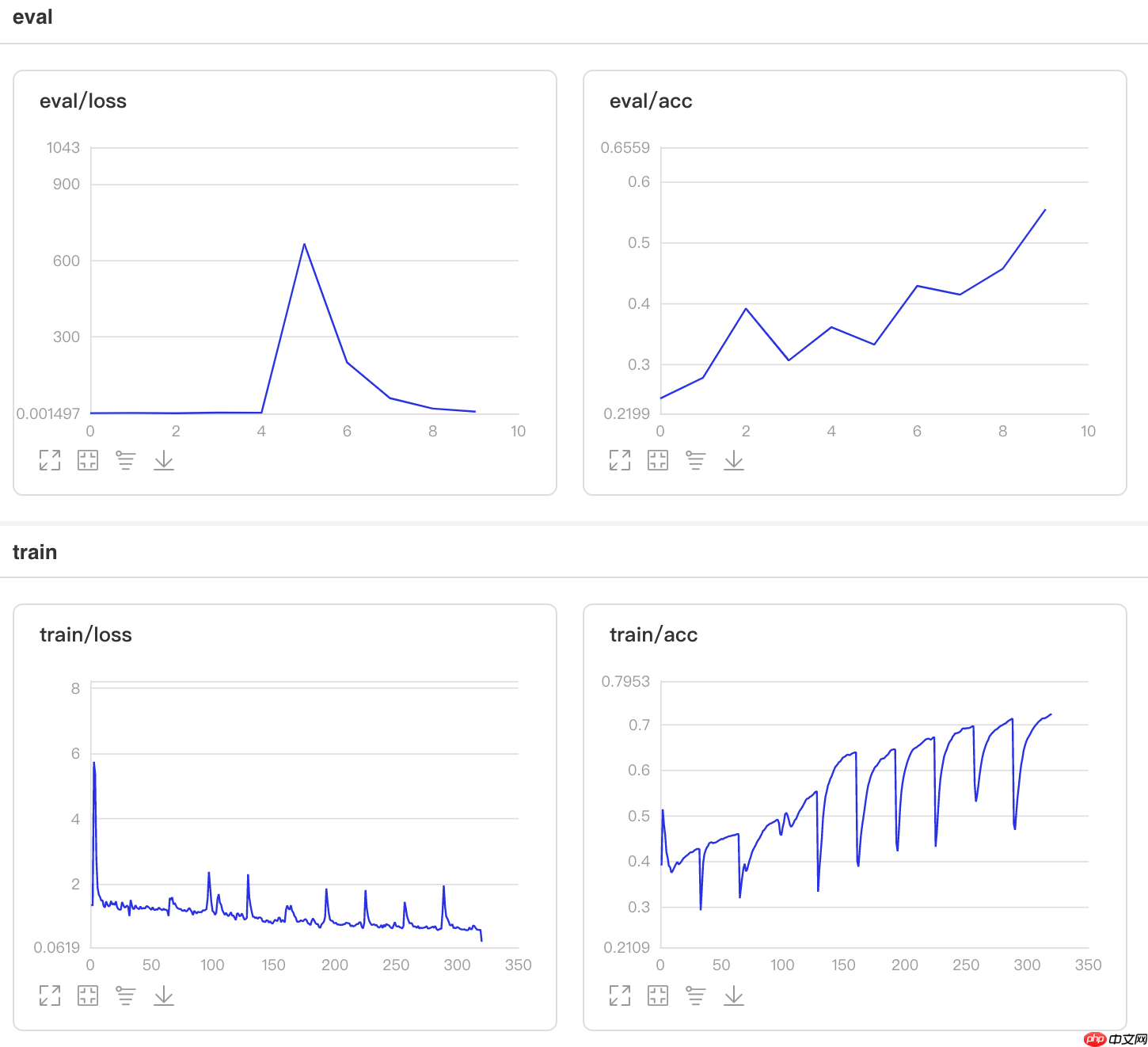

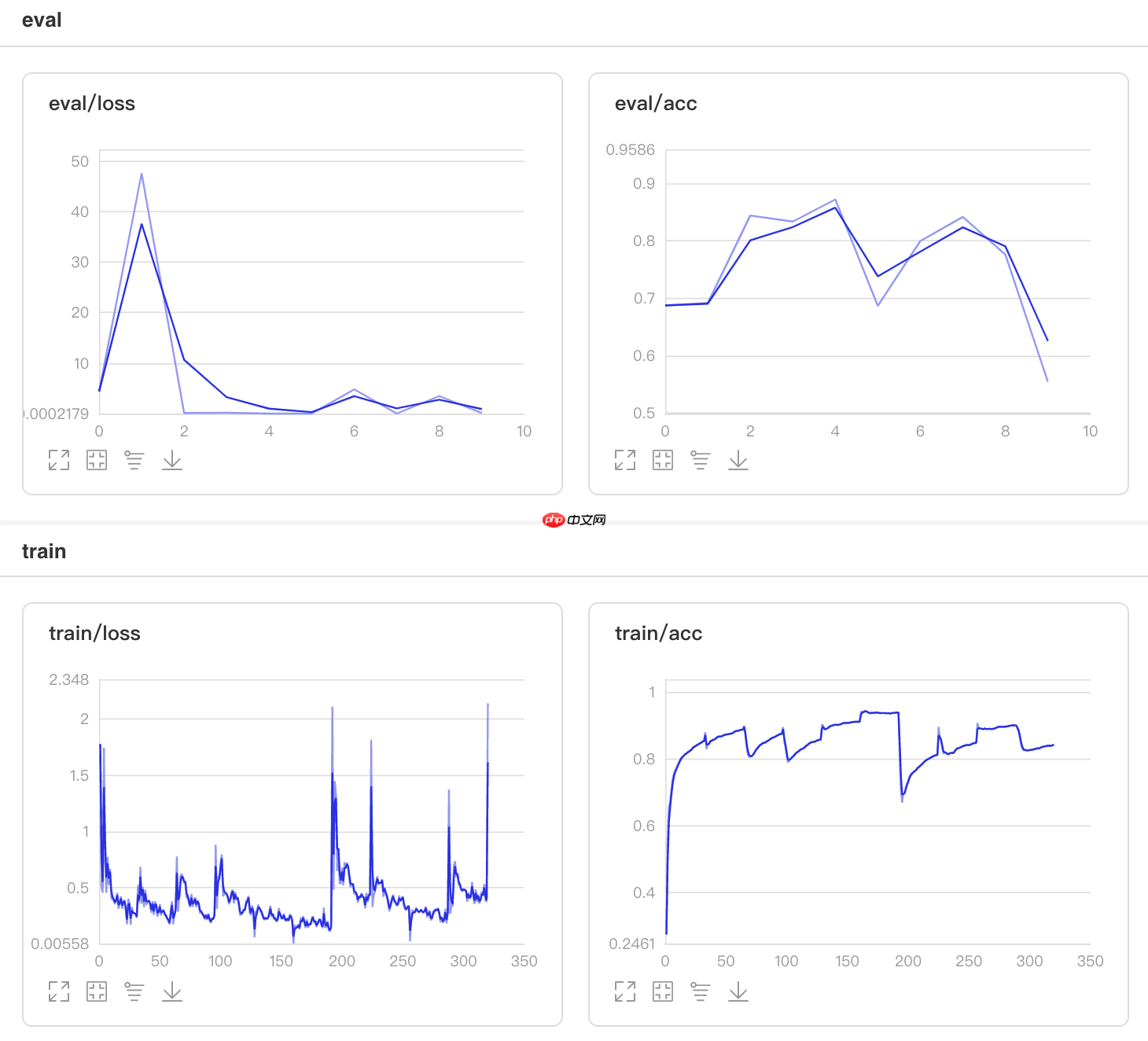

可视化结果

图3 RMSProp训练验证图

In [ ]## 查看测试集上的效果!python code/test.py --optim 'rmsprop'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 01:53:07.772697 16390 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 01:53:07.778218 16390 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 3.4743e-04 - acc: 0.5706 - 29ms/step Eval samples: 1763test accuracy is 0.5706182643221781登录后复制

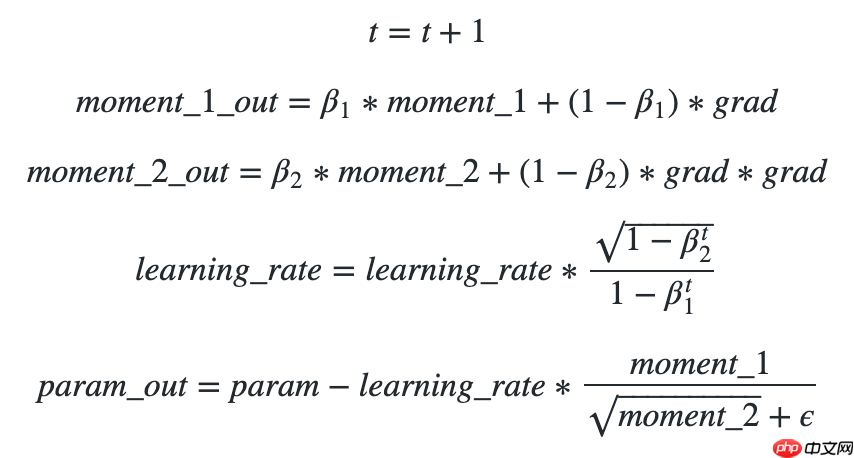

使用Adam优化器

使用方法为:paddle.optimizer.Adam(learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-08, parameters=None, weight_decay=None, grad_clip=None, name=None, lazy_mode=False)

Adam优化器, 能够利用梯度的一阶矩估计和二阶矩估计动态调整每个参数的学习率。具体细节可参考论文Adam: A Method for Stochastic Optimization

更新公式如下:

In [ ]

In [ ]## 开始训练!python code/train.py --optim 'adam'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 01:55:00.722407 16553 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 01:55:00.727862 16553 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.7702152414194299 at epoch 0best acc is 0.8743455497382199 at epoch 1登录后复制

可视化结果

图4 Adam训练验证图

In [ ]## 查看测试集上的效果!python code/test.py --optim 'adam'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 02:20:28.366967 18377 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 02:20:28.372236 18377 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 1.4747 - acc: 0.8775 - 29ms/step Eval samples: 1763test accuracy is 0.8774815655133296登录后复制

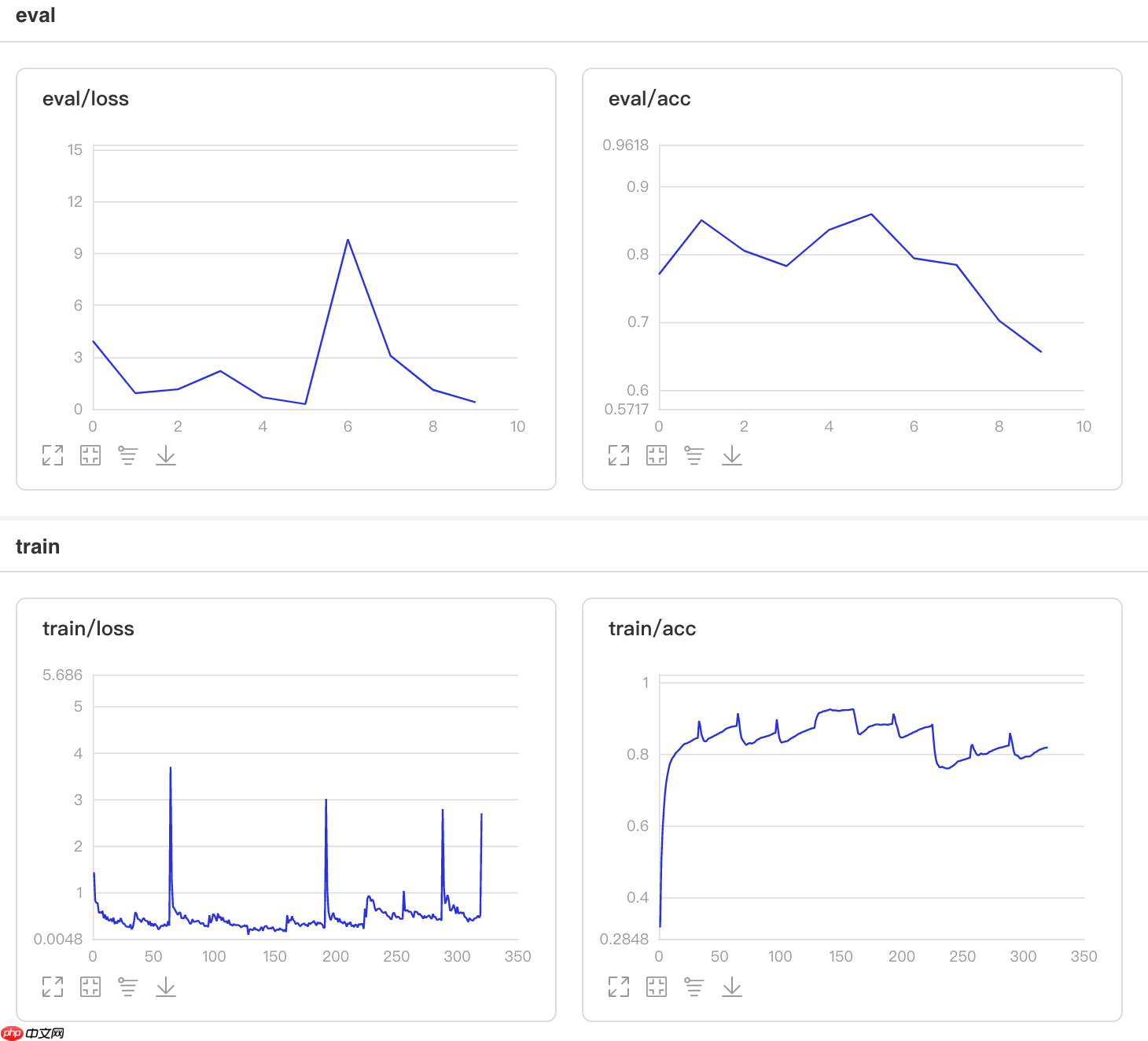

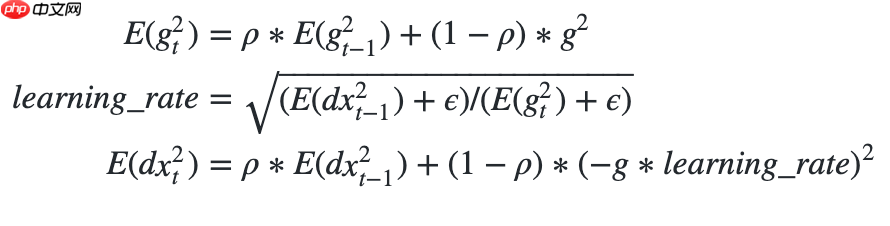

使用Adadelta优化器

使用方法为:paddle.optimizer.Adadelta(learning_rate=0.001, epsilon=1e-06, rho=0.95, parameters=None, weight_decay=0.01, grad_clip=None, name=None)

Adadelta优化器,具体细节可参考论文 ADADELTA: AN ADAPTIVE LEARNING RATE METHOD 。

更新公式如下:

In [ ]

In [ ]## 开始训练!python code/train.py --optim 'adadelta'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 02:21:28.123239 18537 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 02:21:28.128479 18537 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.5962769051774287 at epoch 9登录后复制

可视化结果

图5 Adadelta训练验证图

In [ ]## 查看测试集上的效果!python code/test.py --optim 'adadelta'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 02:47:05.152071 19699 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 02:47:05.157275 19699 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 2.5784 - acc: 0.6086 - 28ms/step Eval samples: 1763test accuracy is 0.608621667612025登录后复制

使用Adagrad优化器

使用方法为:paddle.optimizer.Adagrad(learning_rate=0.001, epsilon=1e-06, rho=0.95, parameters=None, weight_decay=0.01, grad_clip=None, name=None)

Adadelta优化器,具体细节可参考论文 Adaptive Subgradient Methods for Online Learning and Stochastic Optimization 。

## 开始训练!python code/train.py --optim 'adagrad'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 02:48:02.785794 19793 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 02:48:02.791139 19793 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.595695171611402 at epoch 1best acc is 0.9139034322280396 at epoch 2best acc is 0.939499709133217 at epoch 5登录后复制

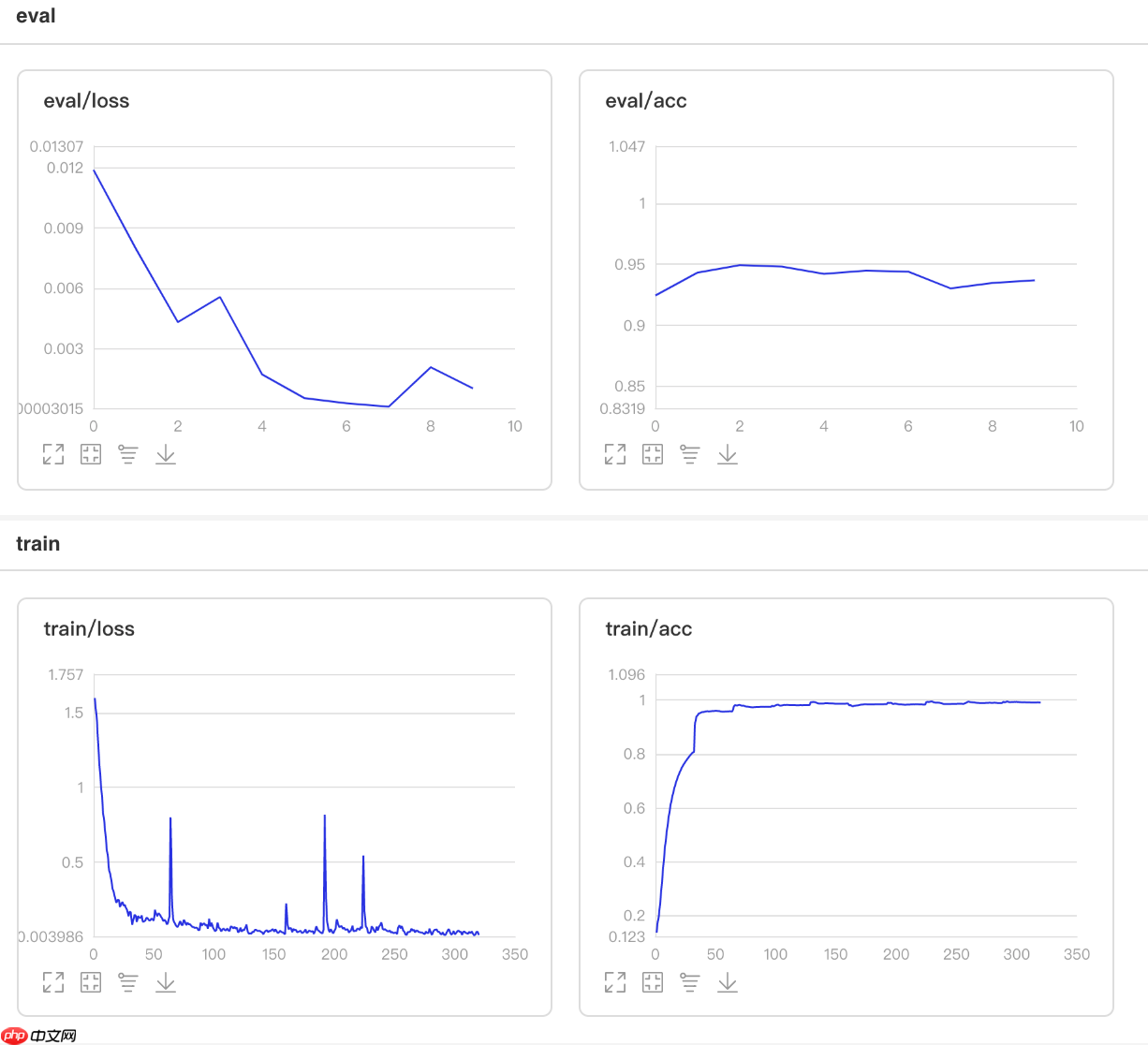

可视化结果

图6 Adagrad训练验证图

In [ ]## 查看测试集上的效果!python code/test.py --optim 'adagrad'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 03:12:55.165827 20790 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 03:12:55.170956 20790 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 1.0373 - acc: 0.9410 - 27ms/step Eval samples: 1763test accuracy is 0.9410096426545661登录后复制

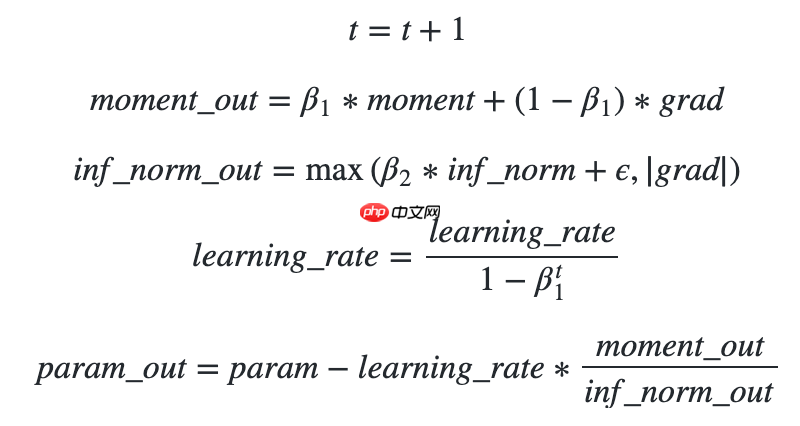

使用Adamax优化器

使用方法为:paddle.optimizer.Adamax(learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-08, parameters=None, weight_decay=None, grad_clip=None, name=None)

Adamax优化器,是参考Adam论文第7节Adamax优化相关内容所实现的。Adamax算法是基于无穷大范数的 Adam 算法的一个变种,使学习率更新的算法更加稳定和简单。

更新公式如下:

In [ ]

In [ ]## 开始训练!python code/train.py --optim 'adamax'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 03:13:51.198694 20886 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 03:13:51.203987 20886 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.9226294357184409 at epoch 0best acc is 0.9383362420011635 at epoch 1best acc is 0.9453170447934846 at epoch 2best acc is 0.9458987783595113 at epoch 3best acc is 0.9493891797556719 at epoch 5best acc is 0.951716114019779 at epoch 7登录后复制

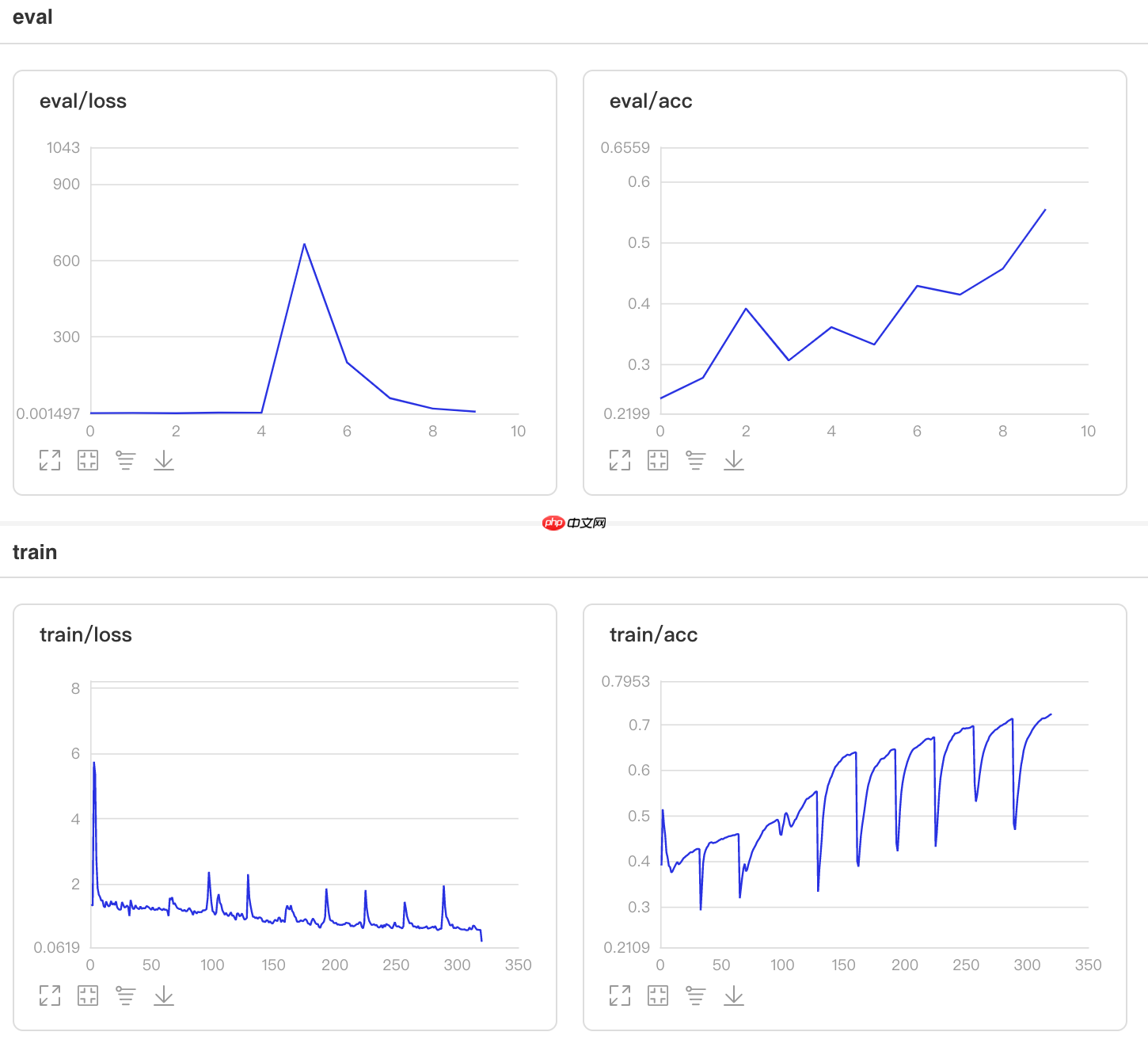

可视化结果

图7 Adamax训练验证图

In [ ]## 查看测试集上的效果!python code/test.py --optim 'adamax'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 03:39:11.441298 22709 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 03:39:11.446803 22709 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 0.0516 - acc: 0.9461 - 27ms/step Eval samples: 1763test accuracy is 0.9461145774248441登录后复制

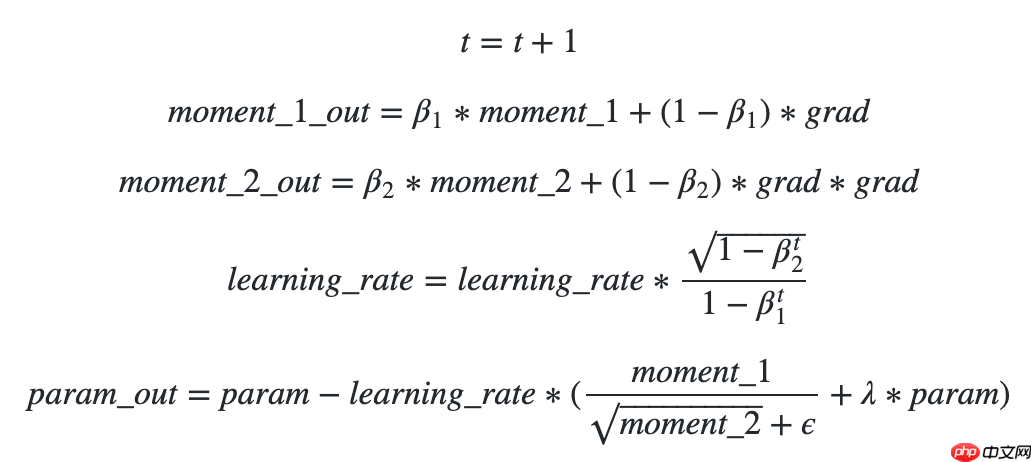

使用AdamW优化器

使用方法为:paddle.optimizer.AdamW(learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-08, parameters=None, weight_decay=0.01, apply_decay_param_fun=None, grad_clip=None, lazy_mode=False, name=None)

AdamW优化器用来解决Adam优化器中L2正则化失效的问题。具体细节可参考论文DECOUPLED WEIGHT DECAY REGULARIZATION

更新公式如下:

In [ ]

In [ ]## 开始训练!python code/train.py --optim 'adamw'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 03:40:07.584594 22837 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 03:40:07.589939 22837 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.6870273414776032 at epoch 0best acc is 0.6910994764397905 at epoch 1best acc is 0.8435136707388017 at epoch 2best acc is 0.8714368819080861 at epoch 4登录后复制

可视化结果

图8 AdamW训练验证图

In [ ]## 查看测试集上的效果!python code/test.py --optim 'adamw'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 04:05:06.006824 24628 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 04:05:06.012018 24628 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 1.9632e-04 - acc: 0.8769 - 26ms/step Eval samples: 1763test accuracy is 0.8769143505388542登录后复制

使用Lamb优化器

使用方法为:paddle.optimizer.Lamb(learning_rate=0.001, lamb_weight_decay=0.01,beta1=0.9, beta2=0.999, epsilon=1e-06,parameters=None, grad_clip=None, name=None)

Lamb优化器用来解决Batch_size较大时准确率较低的问题。具体细节可参考论文Large Batch Optimization for Deep Learning: Training BERT in 76 minutes

## 开始训练!python code/train.py --optim 'lamb'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 21:16:25.052958 26375 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 21:16:25.058046 26375 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.9243746364165212 at epoch 0best acc is 0.9488074461896452 at epoch 1best acc is 0.951716114019779 at epoch 2登录后复制

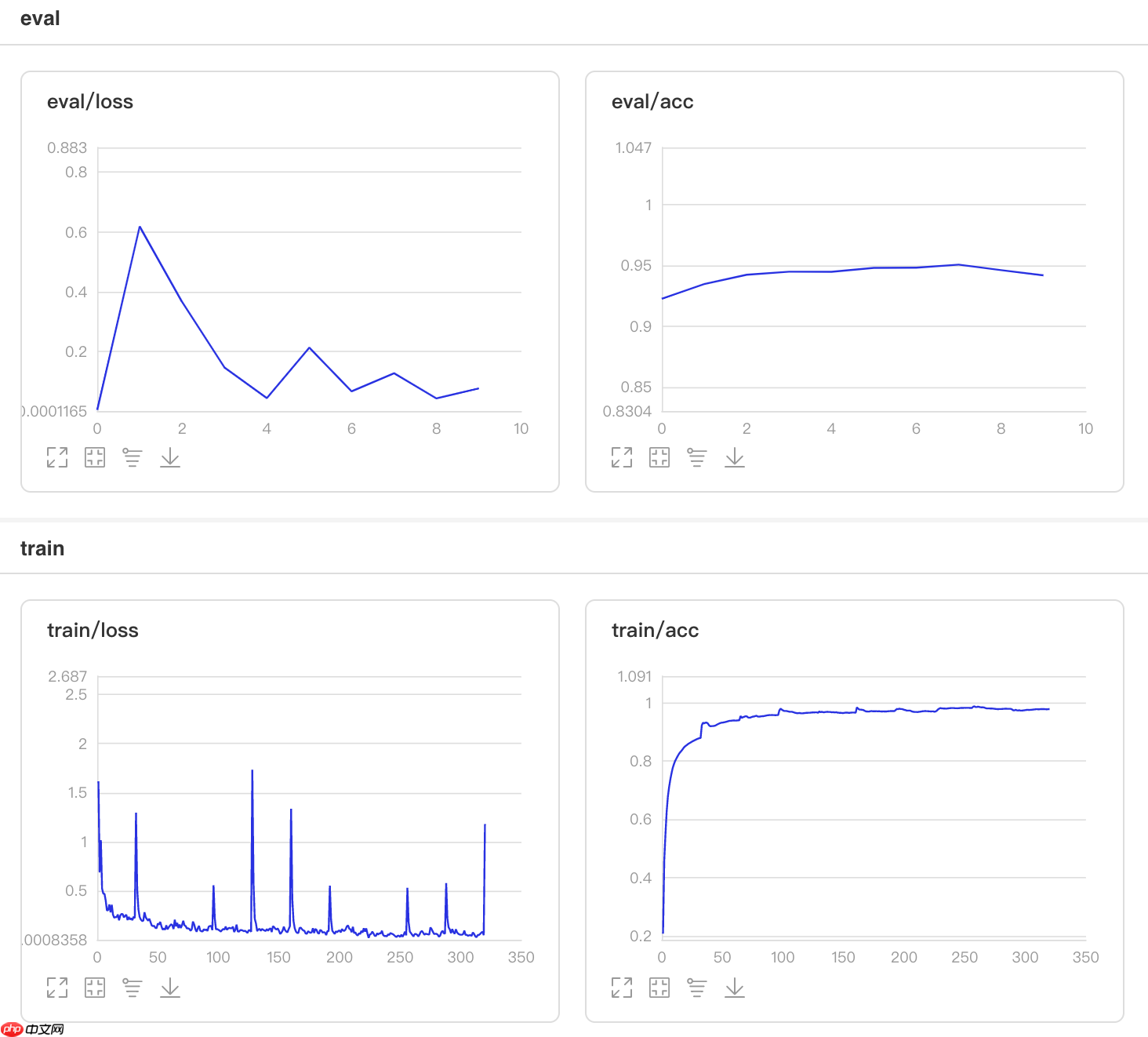

可视化结果

图9 Lamb训练验证图

In [ ]## 查看测试集上的效果!python code/test.py --optim 'lamb'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0124 21:45:04.622473 28401 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0124 21:45:04.627763 28401 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 0.0074 - acc: 0.9546 - 26ms/step Eval samples: 1763test accuracy is 0.9546228020419739登录后复制

使用LookAhead优化器

使用方法为: optimizer=paddle.optimizer.SGD(learning_rate=0.001, lamb_weight_decay=0.01,beta1=0.9, beta2=0.999, epsilon=1e-06,parameters=None, grad_clip=None, name=None) lookahead = LookAhead(optimizer, alpha=0.2, k=5)

LookAhead可以和任何已有的优化器结合,通过更新已有优化器产生的权重来加快收敛速度。LookAhead的性能也取决于所选择的优化器。具体细节可参考论文Lookahead Optimizer: k steps forward, 1 step back

注意:目前该方法需要自己使用lookahead.py文件import后使用。

In [ ]## 开始训练!python code/train.py --optim 'lookahead'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0126 10:31:56.395859 16515 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0126 10:31:56.401016 16515 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.7777777777777778 at epoch 0best acc is 0.8662012798138452 at epoch 1best acc is 0.8865619546247818 at epoch 2best acc is 0.9150668993600931 at epoch 3best acc is 0.9197207678883071 at epoch 4best acc is 0.9232111692844677 at epoch 5best acc is 0.9336823734729494 at epoch 6best acc is 0.93717277486911 at epoch 7best acc is 0.9424083769633508 at epoch 8登录后复制

可视化结果

图10 LookAhead训练验证图

In [ ]## 查看测试集上的效果!python code/test.py --optim 'lookahead'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0126 11:02:31.468194 18739 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0126 11:02:31.473600 18739 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 0.2146 - acc: 0.9365 - 27ms/step Eval samples: 1763test accuracy is 0.9364719228587635登录后复制

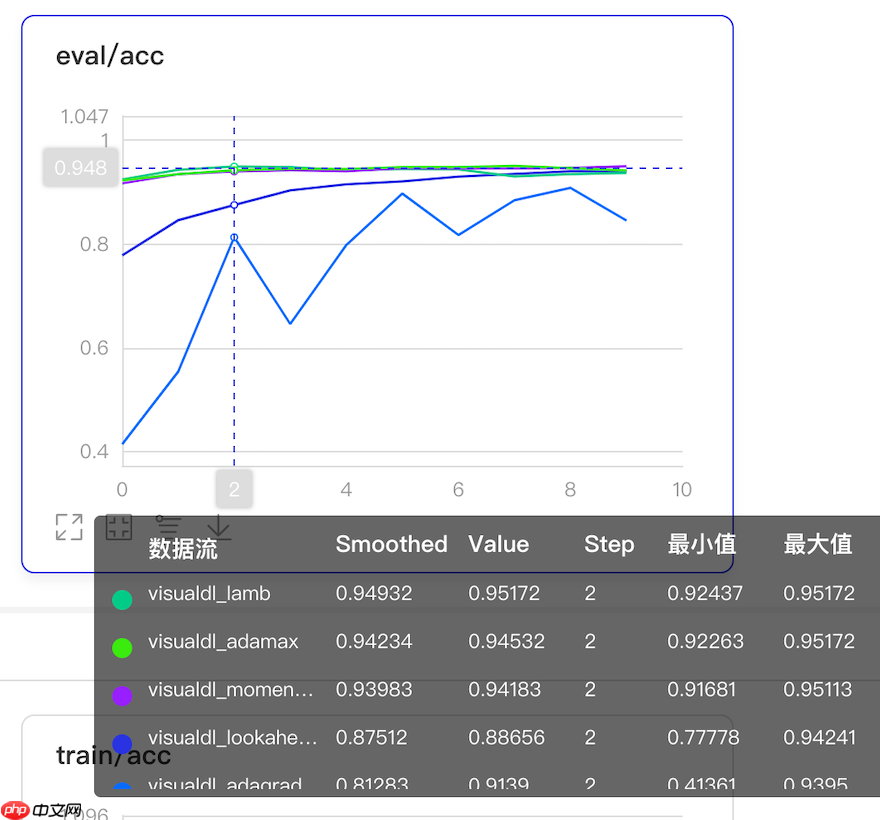

Paddle2.0中在本项目上准确率最高的前5种优化器性能比较

从下图中可以看出,在10个epoch的情况下,性能最优的前5种中,momentum、adamax和lamb性能又是最优的,这也给我们在深度学习训练中选择优化器的方案提供了有价值的参考。

图11 前5种性能最优的优化器性能比较图

自定义优化器算法

Paddle2.0中提供了优化器算法基类paddle.optimizer.Optimizer,使用该基类我们可以自定义优化器算法。这里要注意的是该基类中的方法_append_optimize_op一定要定义。

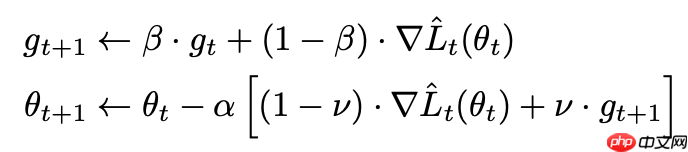

自定义优化器算法QHM

QHM方法来自于论文Quasi-hyperbolic momentum and Adam for deep learning

根据论文的描述,QHM相对于Momentum只是有少量的改变,主要是改变了参数的更新规则。这也让复现变的稍微简单。

更新公式如下:

In [88]

In [88]## 开始训练!python code/train.py --optim 'qhm'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0127 00:36:50.276679 17048 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0127 00:36:50.282220 17048 device_context.cc:330] device: 0, cuDNN Version: 7.6.best acc is 0.5625363583478766 at epoch 0best acc is 0.7393833624200117 at epoch 1best acc is 0.8237347294938918 at epoch 2best acc is 0.8504944735311227 at epoch 3best acc is 0.8702734147760326 at epoch 4best acc is 0.8830715532286213 at epoch 5best acc is 0.8999418266433973 at epoch 6best acc is 0.9057591623036649 at epoch 7best acc is 0.9109947643979057 at epoch 8best acc is 0.9214659685863874 at epoch 9登录后复制

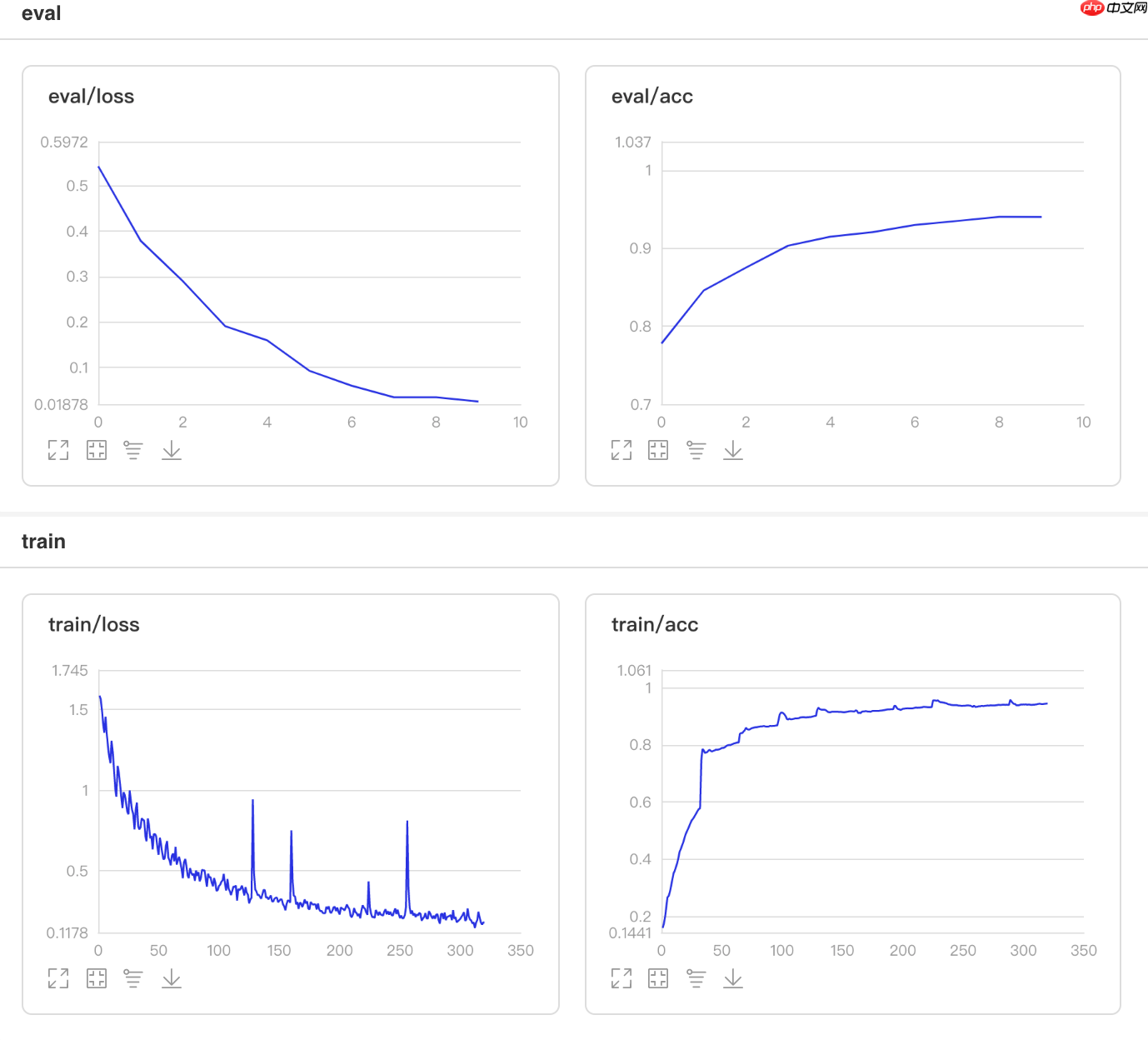

可视化结果

图12 QHM训练验证图

In [89]## 查看测试集上的效果!python code/test.py --optim 'qhm'登录后复制

You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found. Ignore this if TensorRT is not needed.W0127 01:12:05.822453 18214 device_context.cc:320] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 10.1, Runtime API Version: 10.1W0127 01:12:05.827925 18214 device_context.cc:330] device: 0, cuDNN Version: 7.6.Eval begin...The loss value printed in the log is the current batch, and the metric is the average value of previous step.step 1763/1763 [==============================] - loss: 0.8750 - acc: 0.9115 - 29ms/step Eval samples: 1763test accuracy is 0.9115144639818491登录后复制

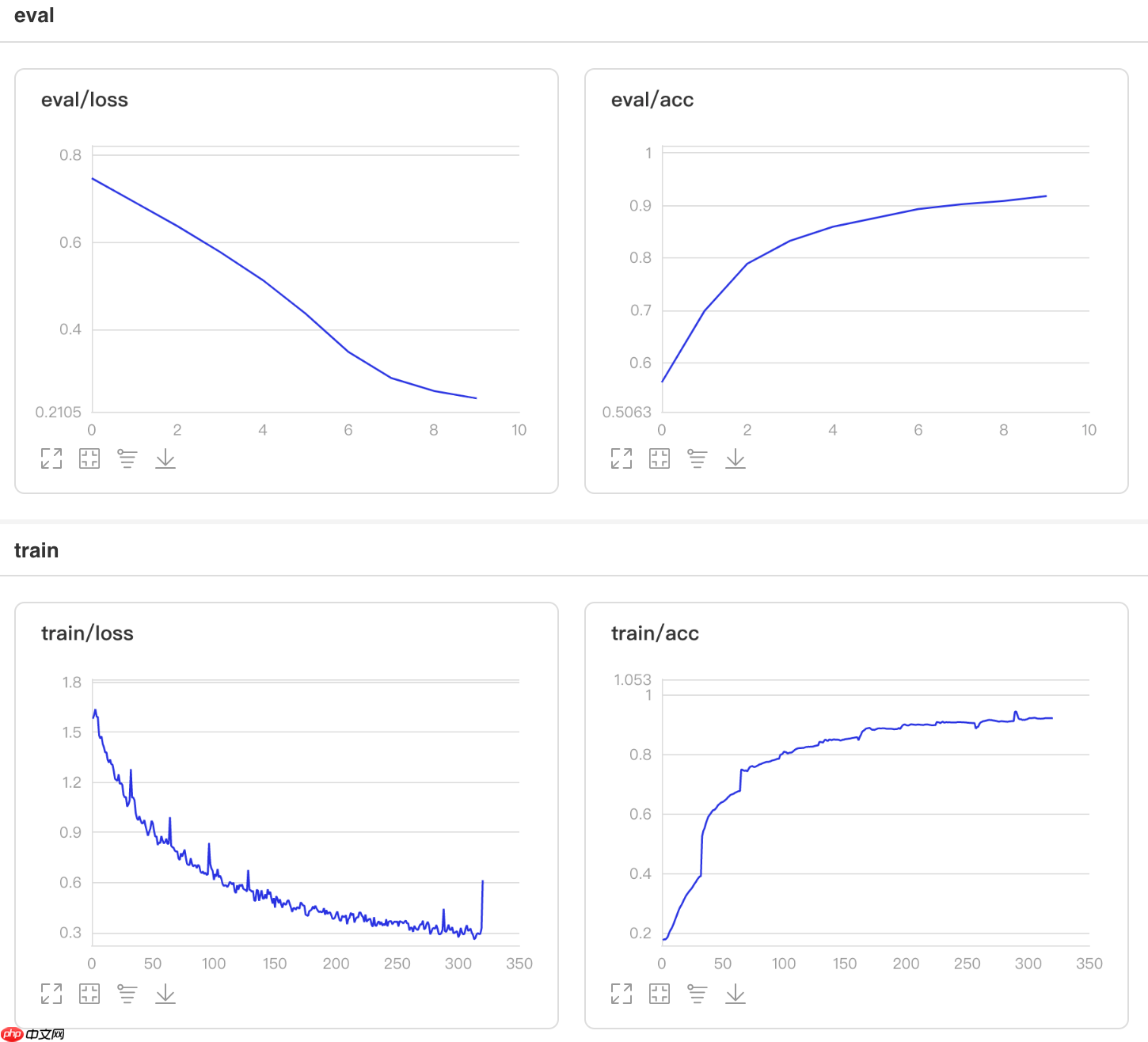

结果比较

本项目中所有优化器的性能比较如下:

图13 优化器性能比较

小编推荐:

相关攻略

更多 - Office文档修复神器 损坏文件抢救终身免费 07.23

- 【新手入门】0 基础掌握大模型训练(一):监督微调SFT算法全解析:从原理到实战 07.23

- PaddleRS:geojson-建筑提取 07.23

- Gemini如何接入智能家居 Gemini智能设备控制方案 07.23

- 豆包AI编程操作说明 豆包AI自动编程技巧 07.23

- AdvSemiSeg: 利用生成对抗实现半监督语义分割 07.23

- pdf转word文档怎么转?五大高效方法全攻略! 07.23

- 基于PPSeg框架的HRNet_W48_Contrast复现 07.23

热门推荐

更多 热门文章

更多 -

- 神角技巧试炼岛高级宝箱在什么位置

-

2021-11-05 11:52

手游攻略

-

- 王者荣耀音乐扭蛋机活动内容奖励详解

-

2021-11-19 18:38

手游攻略

-

- 坎公骑冠剑11

-

2021-10-31 23:18

手游攻略

-

- 原神卡肉是什么意思

-

2022-06-03 14:46

游戏资讯

-

- 《臭作》之100%全完整攻略

-

2025-06-28 12:37

单机攻略