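[Everybody Get Moving] Reverse Crunch AI Counter

Date: 2025-07-23  Author: 游乐小编

This article presents a reverse crunch AI counter built on PaddleHub. Counting reps by hand during a workout is error-prone, so the counter relies on the human_pose_estimation_resnet50_mpii model instead. It detects human body keypoints and uses the change in the knee's x-coordinate to decide when a reverse crunch has been completed. The article covers environment setup, a detection example, and the counting code. In the test, the count matched the number of reps performed, and a video with the detection overlay was generated.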

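I. A Reverse Crunch AI Counter Based on PaddleHub

Only doing sit-ups for your abs? Too many can hurt your back! Try reverse crunches instead; they are safer.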

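1. Background

Ever since Liu Genghong's live-streamed workouts took off, they have set off a nationwide fitness craze.

While exercising you also have to keep track of which rep you are on to reach your target, and it is easy to lose count now and then.

To take counting off your mind during the workout, I built a reverse crunch AI counter with PaddleHub.

Let AI count your reverse crunches.

2. Approach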

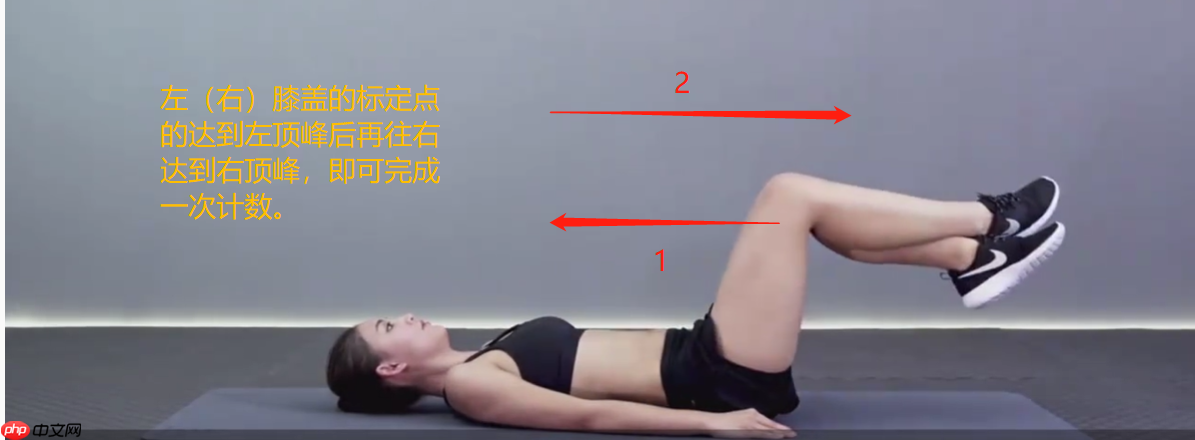

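1. The user opens the phone and, following the prompts, adjusts the distance between body and phone until the whole body is inside the detection frame. The workout can then begin.

2. Human keypoints are detected with PaddleHub's human_pose_estimation_resnet50_mpii model.

3. Reps are counted from the detected data. Here the left (or right) knee keypoint is used: one complete back-and-forth movement of the knee counts as one valid rep.

II. Environment Setup

1. Installing PaddleHub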

    !pip install -U pip --user >log.log
    !pip install -U paddlehub >log.log

    !pip list |grep paddle

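2. Installing the human_pose_estimation_resnet50_mpii model

Model page: https://www.paddlepaddle.org.cn/hubdetail?name=human_pose_estimation_resnet50_mpii&en_category=KeyPointDetection

Model overview: Human skeletal keypoint detection (pose estimation) is one of the fundamental algorithms in computer vision and underpins many computer vision tasks, such as action recognition, person tracking, and gait recognition. Its applications are concentrated in intelligent video surveillance, patient monitoring systems, human-computer interaction, virtual reality, human animation, smart homes, intelligent security, and athlete training assistance. The model's paper, "Simple Baselines for Human Pose Estimation and Tracking", was published by MSRA at ECCV 2018, and the model was trained on the MPII dataset.

    !hub install human_pose_estimation_resnet50_mpii >log.log

    !hub list|grep human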

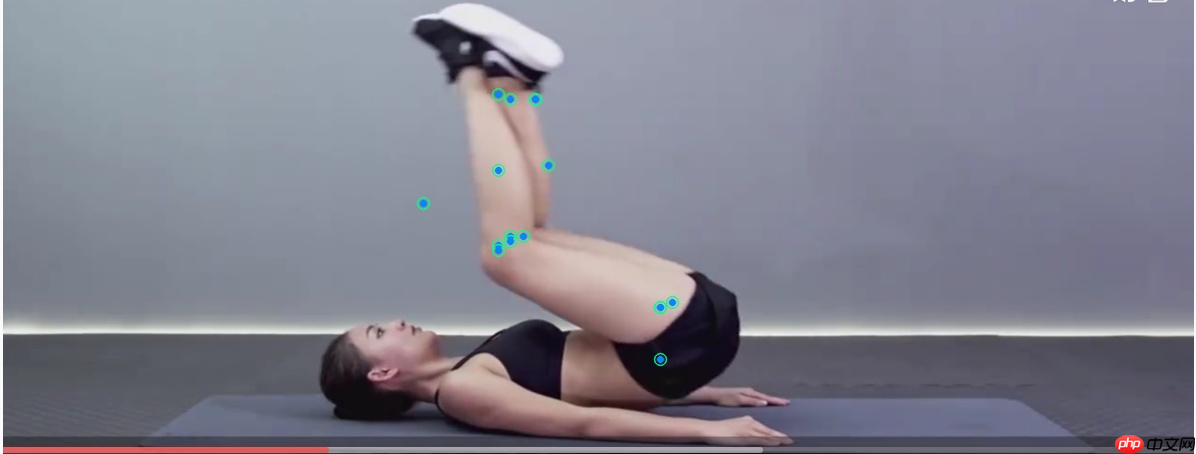

III. Human Keypoint Detection Example

1. Keypoint detection demo

Keypoint detection is run on three images (ready, mid-movement, and finished states), as follows:

    import cv2
    import paddlehub as hub

    pose_estimation = hub.Module(name="human_pose_estimation_resnet50_mpii")

    image1 = cv2.imread('work/ready.png')   # ready state
    image2 = cv2.imread('work/doing.png')   # mid-movement state
    image3 = cv2.imread('work/finish.png')  # finished state
    results = pose_estimation.keypoint_detection(images=[image1, image2, image3], visualization=True)

The annotated output images are saved under output_pose.

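3. How to judge whether a reverse crunch is valid

What is the basis for deciding that one reverse crunch has been performed?

Although some keypoints in the three images above are not located very accurately, the points worth watching are fairly clear, for example the knee keypoints. The movement of the knee keypoint can serve as the criterion.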

    # Print the left and right knee keypoints for the three images
    print(results[0]['data']['right_knee'])
    print(results[1]['data']['right_knee'])
    print(results[2]['data']['right_knee'])
    print(results[0]['data']['left_knee'])
    print(results[1]['data']['left_knee'])
    print(results[2]['data']['left_knee'])
    # Judging from the results, either the left or the right knee keypoint works

    [783, 187]
    [498, 250]
    [784, 187]
    [820, 242]
    [498, 245]
    [809, 183]
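To make the comparison concrete, the short sketch below computes how far each knee moves along the x-axis between the three states, using the coordinates printed above. The 200-pixel threshold is the value the counting code later in this article uses to reject jitter; the names `x_swing` and `JITTER_THRESHOLD` are illustrative and not part of the original project.

```python
# Knee keypoints [x, y] copied from the printed output above.
right_knee = {"ready": [783, 187], "doing": [498, 250], "finish": [784, 187]}
left_knee = {"ready": [820, 242], "doing": [498, 245], "finish": [809, 183]}

JITTER_THRESHOLD = 200  # pixels; same value as in the article's counting code


def x_swing(knee):
    """Return the x displacement for (ready -> doing, doing -> finish)."""
    pull_in = knee["ready"][0] - knee["doing"][0]
    push_out = knee["finish"][0] - knee["doing"][0]
    return pull_in, push_out


for name, knee in (("right", right_knee), ("left", left_knee)):
    pull_in, push_out = x_swing(knee)
    clear = pull_in > JITTER_THRESHOLD and push_out > JITTER_THRESHOLD
    print(name, pull_in, push_out, clear)
# prints:
# right 285 286 True
# left 322 311 True
```

Both knees swing by roughly 300 pixels in each direction in these sample frames, well above the threshold, which is consistent with the remark that either knee can be used.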

IV. Smart Counting

    import math
    import os

    import cv2
    import paddlehub as hub
    from matplotlib import pyplot as plt

    os.environ['CUDA_VISIBLE_DEVICES'] = '0'
    %matplotlib inline


    def countYwqz():
        """Run pose estimation on every frame; return frame indices and knee x-coordinates."""
        pose_estimation = hub.Module(name="human_pose_estimation_resnet50_mpii")
        num = 0
        all_num = []
        flip_list = []
        fps = 60
        # The source can be a web video stream or a file
        file_name = 'work/fan_juanfu.mp4'
        cap = cv2.VideoCapture(file_name)
        width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
        fourcc = cv2.VideoWriter_fourcc(*'mp4v')
        # `out` is used afterwards to assemble the annotated frames into a video
        out = cv2.VideoWriter('output.mp4', fourcc, fps, (width, height))
        while cap.isOpened():
            success, image = cap.read()
            if not success:
                break
            results = pose_estimation.keypoint_detection(images=[image], visualization=True, use_gpu=True)
            flip = results[0]['data']['right_knee'][0]  # x-coordinate of the knee
            flip_list.append(flip)
            all_num.append(num)
            num += 1
        cap.release()
        # Write the video from the annotated frames saved under output_pose/
        img_root = "output_pose/"
        # Sort by the number in the filename; otherwise the frames are assembled out of order
        im_names = os.listdir(img_root)
        im_names.sort(key=lambda x: int(x.replace("ndarray_time=", "").split('.')[0]))
        for im_name in im_names:
            img = img_root + im_name
            print(img)
            frame = cv2.imread(img)
            out.write(frame)
        out.release()
        return all_num, flip_list


    def get_count(x, y):
        """Count reps from the turning points of the knee x-coordinate series y."""
        count = 0
        flag = False
        count_list = [0]  # knee x-coordinates at the counted extrema (seeded with 0)
        for i in range(len(y) - 1):
            if y[i] <= y[i + 1] and flag == False:
                continue
            elif y[i] >= y[i + 1] and flag == True:
                continue
            else:
                # Ignore slight jitter: count a turning point only if some sample within
                # 3 frames differs from the last counted extremum by more than 200 pixels.
                # The slice keeps the window inside the list at both ends.
                nearby = y[max(i - 3, 0):i + 4]
                if any(abs(count_list[-1] - v) > 200 for v in nearby):
                    count = count + 1
                    count_list.append(y[i])
                    print(x[i])
                flag = not flag
        return math.floor(count / 2)


    if __name__ == "__main__":
        x, y = countYwqz()
        plt.figure(figsize=(8, 8))
        count = get_count(x, y)
        plt.title(f"point numbers: {count}")
        plt.plot(x, y)
        plt.show()

1. Counting result

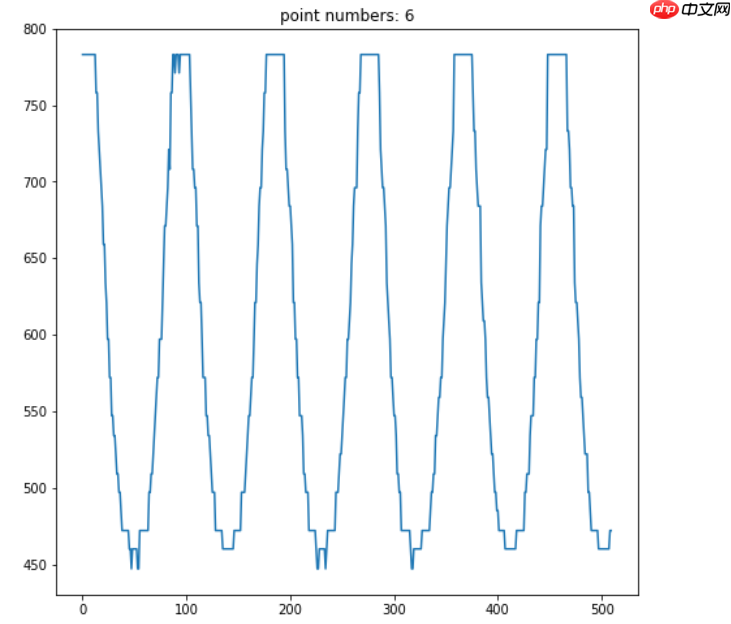

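(The plot shows 6 peaks in total, giving a count of 6, which matches the 6 reverse crunches performed in the original video.)

2. Generated video

In the root directory you can find: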
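As a cross-check on a count like this, here is a minimal sketch of a different technique from the article's extremum-based `get_count`: a two-threshold (hysteresis) counter. It counts one rep each time the knee x-coordinate drops below a low threshold and then returns above a high threshold. The function name `count_reps`, the thresholds 550 and 730 (picked to sit between the sample knee positions of about 498 and 783), and the synthetic test signal are all assumptions for illustration, not values from the original project.

```python
import math


def count_reps(xs, low, high):
    """Count excursions that drop below `low` and then return above `high`."""
    reps = 0
    pulled_in = False  # True while the knee is on the crunched side
    for x in xs:
        if not pulled_in and x < low:
            pulled_in = True
        elif pulled_in and x > high:
            pulled_in = False
            reps += 1
    return reps


# Synthetic knee trace: 6 out-and-back swings between about 500 and 780 pixels,
# plus a small deterministic wobble of up to 10 pixels standing in for jitter.
n = 360
xs = [640 + 140 * math.cos(2 * math.pi * 6 * t / n) + 10 * math.sin(37 * t)
      for t in range(n)]

print(count_reps(xs, low=550, high=730))  # prints 6
```

Because a rep is only registered after the signal crosses both thresholds, wobble that stays within the 180-pixel gap between them cannot add a count. The trade-off is that the thresholds depend on camera distance and framing, so they would need to be chosen per video.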

output.mp4
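The script sorts the saved frames by the number embedded in each filename before writing them to the video. The sketch below illustrates why a plain string sort is not a safe substitute. The short filenames are made up for the example (the real files carry much longer numbers after `ndarray_time=`), and `frame_index` is a hypothetical helper that mirrors the sort key used in the script.

```python
def frame_index(name):
    """Extract the integer that follows 'ndarray_time=' in a frame filename."""
    return int(name.replace("ndarray_time=", "").split(".")[0])


# Made-up filenames, listed in arbitrary order as a directory listing might be.
names = ["ndarray_time=10.jpg", "ndarray_time=9.jpg", "ndarray_time=100.jpg"]

print(sorted(names))
# ['ndarray_time=10.jpg', 'ndarray_time=100.jpg', 'ndarray_time=9.jpg']
print(sorted(names, key=frame_index))
# ['ndarray_time=9.jpg', 'ndarray_time=10.jpg', 'ndarray_time=100.jpg']
```

A string sort compares character by character, so frame 9 lands after frame 100, whereas the numeric key restores the order in which the frames were produced.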
