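[Campus AI Day - AI Workshop] Traffic Sign Image Classification

Date: 2025-07-20 Author: 游乐小编

This article presents a detection and classification project for Chinese traffic signs based on PP-PicoDet_LCNet. It covers installing the environment, converting the dataset to COCO format, and training a PP-PicoDet model that reaches an mAP(0.5) of about 98.9% on both the validation and test sets. It also covers model prediction, export, and quantization-aware training; the quantized model loses a little accuracy.

Classification and Detection of Chinese Traffic Sign Images Based on PicoDet_LCNet

1. Project Overview

The core job of a traffic sign recognition system is to recognize road traffic signs accurately and promptly, so that it can capture current road conditions and the driving environment. This lets it remind and assist the driver in keeping track of road information and correct improper driving behavior. Traditional object detection algorithms are easily affected by many factors, which makes them hard to implement, low in accuracy, and slow.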

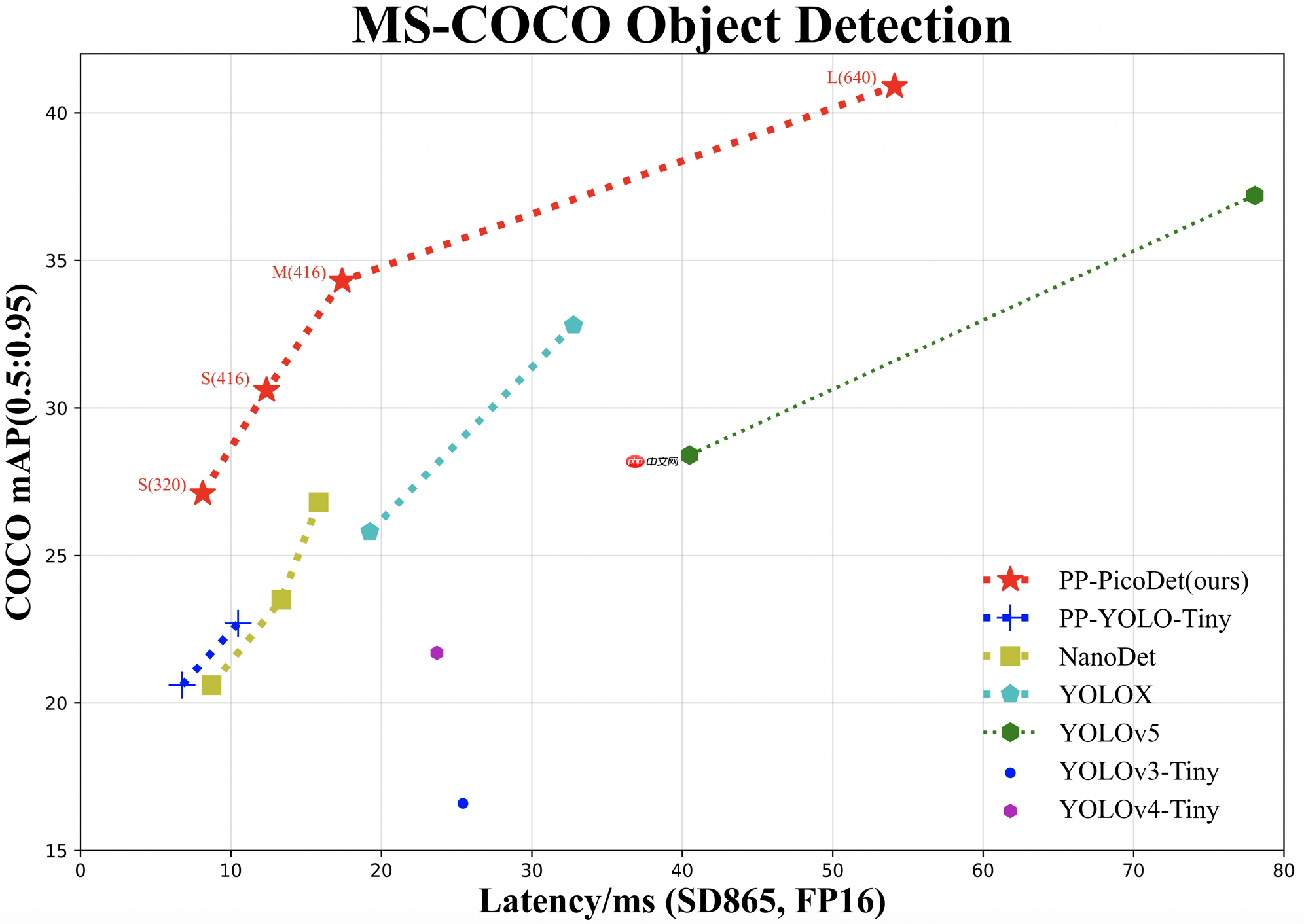

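To address these problems, we chose PP-PicoDet, a new family of lightweight models in PaddleDetection, the PaddlePaddle object detection toolkit. The model performs very well on mobile devices and was presented as a new state-of-the-art (SOTA) lightweight model. For technical details, see the arXiv technical report. Figure 1 shows the model's results.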

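2. Installation

2.1 Runtime Environment

- PaddlePaddle >= 2.2.2
- Python >= 3.5
- PaddleSlim >= 2.2.2
- PaddleLite >= 2.10

2.2 Install from the Archive

The PaddleDetection code with modified configuration files and the trained model are provided; simply unzip the archive.

    !unzip -q PaddleDetection.zip

2.3 Install by Cloning

To download the PaddleDetection source code instead, run the following command:

    # !git clone https://github.com/PaddlePaddle/PaddleDetection.git -b develop --depth 1
    # Alternatively, use the Gitee mirror in China
    !git clone https://gitee.com/paddlepaddle/PaddleDetection.git

Output:

    Cloning into 'PaddleDetection'...
    remote: Enumerating objects: 23983, done.
    remote: Counting objects: 100% (4453/4453), done.
    remote: Compressing objects: 100% (2058/2058), done.
    remote: Total 23983 (delta 3212), reused 3376 (delta 2387), pack-reused 19530
    Receiving objects: 100% (23983/23983), 264.01 MiB | 8.81 MiB/s, done.
    Resolving deltas: 100% (17761/17761), done.
    Checking connectivity... done.

Then install the dependencies:

    # Install the other dependencies
    %cd /home/aistudio/PaddleDetection/
    !pip install -r requirements.txt
    # Build and install paddledet
    !python setup.py install
    # Install PaddleSlim, the model compression library, which provides
    # pruning, quantization, distillation, and neural architecture search
    !pip install paddleslim

Note: for more installation instructions, see the installation documentation.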

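3. Data Preparation

3.1 Dataset Overview

This project uses the PascalVOC version of the Chinese traffic sign dataset. The dataset has three classes (warning, prohibitory, mandatory) and 13,829 images in total. It is split 7:1:2 into training, validation, and test sets: 9,680 training images, 1,382 validation images, and 2,767 test images. Figure 2 shows some sample images.

The source data is in VOC format and is stored as follows:

    dataset
    ├── Annotations
    │   ├── 000000.xml
    │   ├── 000001.xml
    │   ├── 000002.xml
    │   |   ...
    ├── Images
    │   ├── 000000.jpg
    │   ├── 000001.jpg
    │   ├── 000002.jpg
    │   |   ...
    ├── label_list.txt (required)
    ├── train.txt (training set file list; each line looks like: ./Images/000000.jpg ./Annotations/xxx1.xml)
    ├── valid.txt (evaluation set file list)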
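The 7:1:2 split and the list-file format described above can be sketched as follows. This is a minimal illustration, not the script used to prepare the dataset: the function names `split_dataset` and `to_list_line`, the shuffle seed, and the assumption that every image has an XML file with the same stem are all hypothetical.

```python
import random


def split_dataset(stems, ratios=(7, 1, 2), seed=0):
    """Shuffle sample stems and split them by integer ratios (train, val, test)."""
    stems = list(stems)
    random.Random(seed).shuffle(stems)
    total = sum(ratios)
    n_train = len(stems) * ratios[0] // total
    n_val = len(stems) * ratios[1] // total
    # Whatever remains after train and val goes to the test set.
    return (
        stems[:n_train],
        stems[n_train:n_train + n_val],
        stems[n_train + n_val:],
    )


def to_list_line(stem):
    """Format one list-file entry as '<image path> <annotation path>' (assumed layout)."""
    return f"./Images/{stem}.jpg ./Annotations/{stem}.xml"


# Hypothetical stems 000000 ... 013828, one per image in the dataset.
stems = [f"{i:06d}" for i in range(13829)]
train, val, test = split_dataset(stems)
print(len(train), len(val), len(test))  # 9680 1382 2767
print(to_list_line(train[0]))
```

With integer division, 13,829 images split into 9,680, 1,382, and 2,767, which matches the counts reported for this dataset.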

The following commands convert the dataset to COCO format. They only need to be run once.

    # First, unzip the dataset
    %cd /home/aistudio
    # Create the target directory
    !mkdir dataset
    # Unzip the dataset
    !unzip -d dataset -q /home/aistudio/data/data51578/CCTSDB_VOC.zip
    # -d: target directory
    # -q: do not print extraction details

Output:

    /home/aistudio

3.2 Converting the Dataset to COCO Format

    # Training set
    %cd PaddleDetection/
    # Parameters:
    #   --dataset_type: format of the source data
    #   --voc_anno_dir: folder containing the XML annotation files
    #   --voc_anno_list: training set list
    #   --voc_label_list: class labels
    #   --voc_out_name: output JSON file
    !python tools/x2coco.py \
        --dataset_type voc \
        --voc_anno_dir /home/aistudio/dataset/ \
        --voc_anno_list /home/aistudio/dataset/train_list.txt \
        --voc_label_list /home/aistudio/dataset/labels.txt \
        --voc_out_name /home/aistudio/dataset/coco_train.json

Output:

    /home/aistudio/PaddleDetection
    Start converting !
    100%|████████████████████████████████████| 9680/9680 [00:00<00:00, 16720.55it/s]

Validation set:

    # Validation set
    !python tools/x2coco.py \
        --dataset_type voc \
        --voc_anno_dir /home/aistudio/dataset/ \
        --voc_anno_list /home/aistudio/dataset/val_list.txt \
        --voc_label_list /home/aistudio/dataset/labels.txt \
        --voc_out_name /home/aistudio/dataset/coco_val.json

Output:

    Start converting !
    100%|████████████████████████████████████| 1382/1382 [00:00<00:00, 18472.52it/s]

Test set:

    # Test set
    !python tools/x2coco.py \
        --dataset_type voc \
        --voc_anno_dir /home/aistudio/dataset/ \
        --voc_anno_list /home/aistudio/dataset/test_list.txt \
        --voc_label_list /home/aistudio/dataset/labels.txt \
        --voc_out_name /home/aistudio/dataset/coco_test.json

Output:

    Start converting !
    100%|████████████████████████████████████| 2767/2767 [00:00<00:00, 18793.01it/s]

After the commands finish, the dataset files are organized as follows:

    ├── coco_train.json
    ├── coco_val.json
    ├── coco_test.json
    │   ...
    ├── Images
    │   ├── 000000.jpg
    │   ├── 000001.jpg
    │   ├── 000002.jpg
    │   ├── ...
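To make the conversion less of a black box, here is a rough sketch of what turning VOC annotations into a COCO-style structure involves. It is not the code of `tools/x2coco.py`: the function `voc_to_coco`, the sample XML, and the choice to number category ids from 1 are assumptions made for illustration only.

```python
import json
import xml.etree.ElementTree as ET


def voc_to_coco(xml_strings, labels):
    """Convert VOC annotation XML strings into one COCO-style dict."""
    # Assumption: category ids start at 1, in label-list order.
    cat_ids = {name: i + 1 for i, name in enumerate(labels)}
    coco = {
        "images": [],
        "annotations": [],
        "categories": [{"id": cid, "name": name} for name, cid in cat_ids.items()],
    }
    ann_id = 1
    for img_id, xml_text in enumerate(xml_strings, start=1):
        root = ET.fromstring(xml_text)
        size = root.find("size")
        coco["images"].append({
            "id": img_id,
            "file_name": root.findtext("filename"),
            "width": int(size.findtext("width")),
            "height": int(size.findtext("height")),
        })
        for obj in root.iter("object"):
            box = obj.find("bndbox")
            xmin, ymin, xmax, ymax = (
                float(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")
            )
            w, h = xmax - xmin, ymax - ymin
            coco["annotations"].append({
                "id": ann_id,
                "image_id": img_id,
                "category_id": cat_ids[obj.findtext("name")],
                "bbox": [xmin, ymin, w, h],  # COCO stores [x, y, width, height]
                "area": w * h,
                "iscrowd": 0,
            })
            ann_id += 1
    return coco


# Hypothetical annotation, not taken from the real dataset.
SAMPLE = """<annotation>
  <filename>000000.jpg</filename>
  <size><width>1024</width><height>768</height><depth>3</depth></size>
  <object>
    <name>warning</name>
    <bndbox><xmin>100</xmin><ymin>50</ymin><xmax>140</xmax><ymax>90</ymax></bndbox>
  </object>
</annotation>"""

coco = voc_to_coco([SAMPLE], ["warning", "prohibitory", "mandatory"])
print(json.dumps(coco["annotations"][0]))
```

The main point is the box convention: VOC stores corner coordinates (xmin, ymin, xmax, ymax), while COCO stores the top-left corner plus width and height.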

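4. Model Selection

4.1 About PP-PicoDet

This task requires both high speed and high accuracy, so we chose PP-PicoDet, the new lightweight model family introduced in PaddleDetection. The model has the following features:

- Higher mAP: the first model with fewer than 1M parameters to exceed 30 mAP(0.5:0.95) (with 416-pixel input).
- Faster inference: up to 150 FPS on an ARM CPU.
- Deployment friendly: supports the PaddleLite/MNN/NCNN/OpenVINO inference libraries, supports export to ONNX, and provides C++/Python/Android demos.
- Advanced algorithms: innovates on existing SOTA algorithms, including ESNet, CSP-PAN, SimOTA, and others.

4.2 PP-PicoDet Pretrained Models

PP-PicoDet provides several models pretrained on the COCO dataset, as listed in the table below: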

5. Model Training

5.1 Modifying the Training Configuration Files

This project uses PP-PicoDet as the traffic sign recognition model. Training takes the following steps.

First, modify configs/datasets/coco_detection.yml:

- num_classes: the number of classes in the dataset
- image_dir, anno_path, and dataset_dir for the training, validation, and test sets: the image path, the annotation JSON path, and the dataset path

The modified file:

    metric: COCO
    num_classes: 3

    TrainDataset:
      !COCODataSet
        image_dir: JPEGImages
        anno_path: coco_train.json
        dataset_dir: /home/aistudio/dataset
        data_fields: ['image', 'gt_bbox', 'gt_class', 'is_crowd']

    EvalDataset:
      !COCODataSet
        image_dir: JPEGImages
        anno_path: coco_val.json
        dataset_dir: /home/aistudio/dataset

    TestDataset:
      !ImageFolder
        anno_path: coco_test.json #labels.txt # also support txt (like VOC's label_list.txt)
        dataset_dir: /home/aistudio/dataset # if set, anno_path will be 'dataset_dir/anno_path'

Next, modify configs/picodet/picodet_l_640_coco_lcnet.yml:

- Pretrained model: pretrain_weights
- Training hyperparameters: epoch, batch_size, base_lr

The detailed configuration changes are shown below.

Learning rate conversion: lr_new = lr_default * (batch_size_new * gpu_number_new) / (batch_size_default * gpu_number_default)
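The conversion rule can be checked with a few lines of Python. This is only a sketch: the function name `scale_learning_rate` is made up here, and the default setup of 4 GPUs with a batch size of 12 per GPU is an assumption. It is consistent with the configuration in this section, which lists `base_lr: 0.015` with the earlier value `0.06` left in a comment, but the source does not state it.

```python
def scale_learning_rate(lr_default, batch_default, gpus_default, batch_new, gpus_new):
    """Scale the learning rate linearly with the total batch size."""
    return lr_default * (batch_new * gpus_new) / (batch_default * gpus_default)


# Assumed default: lr 0.06 for 4 GPUs x batch size 12 (not stated in the source).
# New setup: 1 GPU x batch size 12.
new_lr = scale_learning_rate(
    lr_default=0.06, batch_default=12, gpus_default=4, batch_new=12, gpus_new=1
)
print(round(new_lr, 6))  # 0.015
```

The same function applies when a smaller batch size is needed to fit GPU memory: halving the total batch size halves the learning rate.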

    _BASE_: [
      '../datasets/coco_detection.yml',
      '../runtime.yml',
      '_base_/picodet_v2.yml',
      '_base_/optimizer_300e.yml',
      '_base_/picodet_640_reader.yml',
    ]

    pretrain_weights: https://paddle-imagenet-models-name.bj.bcebos.com/dygraph/legendary_models/PPLCNet_x2_0_pretrained.pdparams
    weights: output/picodet_l_640_coco/best_model
    find_unused_parameters: True
    use_ema: true
    epoch: 200
    snapshot_epoch: 10

    LCNet:
      scale: 2.0
      feature_maps: [3, 4, 5]

    LCPAN:
      out_channels: 160

    PicoHeadV2:
      conv_feat:
        name: PicoFeat
        feat_in: 160
        feat_out: 160
        num_convs: 4
        num_fpn_stride: 4
        norm_type: bn
        share_cls_reg: True
        use_se: True
      feat_in_chan: 160

    LearningRate:
      base_lr: 0.015 #0.06
      schedulers:
      - !CosineDecay
        max_epochs: 300
      - !LinearWarmup
        start_factor: 0.1
        steps: 300

    TrainReader:
      batch_size: 12
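The `LearningRate` block combines a linear warmup with cosine decay. The sketch below shows the general shape of such a schedule using the values from the configuration. It is a generic formulation, not PaddleDetection's own scheduler code, and `steps_per_epoch=807` is an assumption (9,680 training images divided by a batch size of 12, rounded up).

```python
import math


def learning_rate(step, base_lr=0.015, warmup_steps=300, start_factor=0.1,
                  max_epochs=300, steps_per_epoch=807):
    """Learning rate at a global step: linear warmup, then cosine decay."""
    if step < warmup_steps:
        # Ramp linearly from start_factor * base_lr up to base_lr.
        alpha = step / warmup_steps
        return base_lr * (start_factor * (1 - alpha) + alpha)
    # Cosine decay from base_lr towards 0 over max_epochs.
    max_steps = max_epochs * steps_per_epoch
    return base_lr * 0.5 * (1 + math.cos(math.pi * step / max_steps))


for epoch in (0, 1, 100, 200):
    step = epoch * 807
    print(f"epoch {epoch:3d}: lr = {learning_rate(step):.6f}")
```

Under these assumptions, note that `epoch` is 200 while the cosine period `max_epochs` is 300, so the rate at the end of training would be roughly a quarter of `base_lr` rather than zero.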

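Finally, start training.

PaddleDetection supports both single-GPU and multi-GPU training to suit different needs. The code is as follows:

5.2 Start Training

    # Train on a single GPU
    # Note: `!export` runs in a throwaway subshell and does not persist,
    # so the notebook magic %env is used to set the variable instead.
    %env CUDA_VISIBLE_DEVICES=0
    !python tools/train.py -c configs/picodet/picodet_l_640_coco_lcnet.yml \
        --use_vdl True --eval

    # After an interruption, resume training with -r
    # %env CUDA_VISIBLE_DEVICES=0
    # !python tools/train.py -c configs/picodet/picodet_l_640_coco_lcnet.yml \
    #     -r output/picodet_l_640_coco_lcnet/75.pdparams \
    #     --use_vdl True --eval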

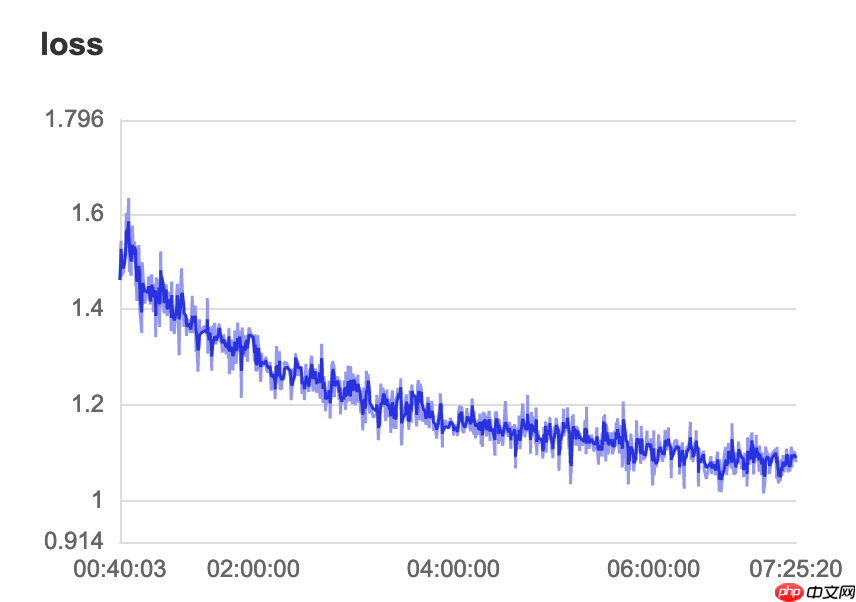

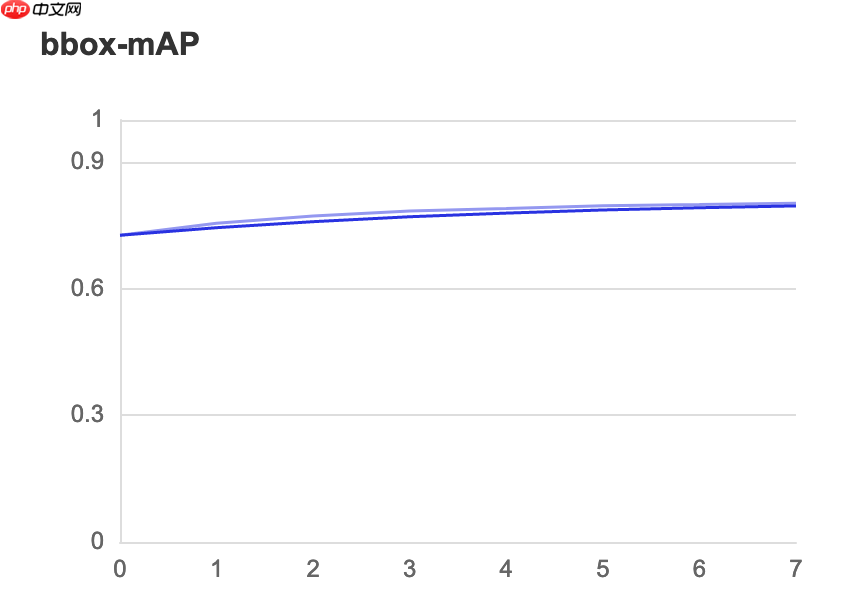

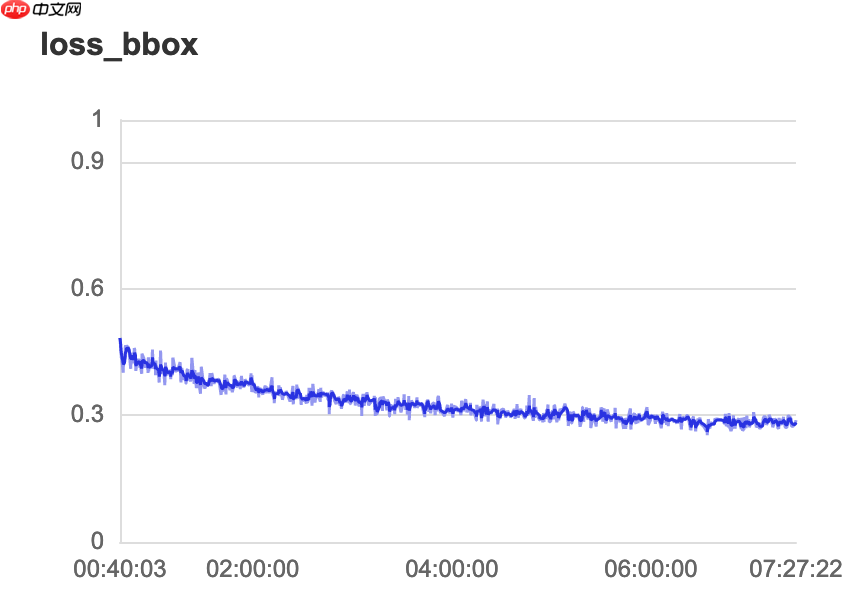

5.3 Data Visualization

5.4 Comparison Across Training Epochs

Without distinguishing object size (area=all):

6. Model Evaluation

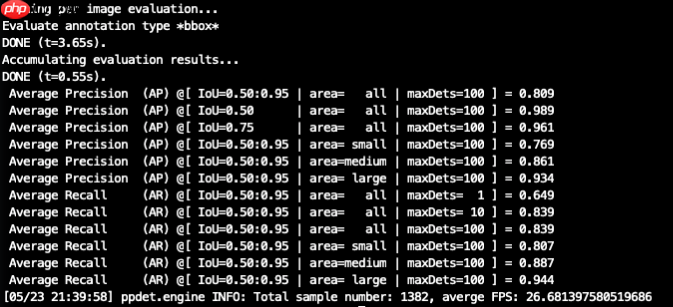

Evaluate the trained model on the validation set with the following code:

    # Evaluate
    %env CUDA_VISIBLE_DEVICES=0
    !python tools/eval.py -c configs/picodet/picodet_l_640_coco_lcnet.yml \
        -o weights=output/picodet_l_640_coco_lcnet/best_model.pdparams

Output:

    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/tensor/creation.py:130: DeprecationWarning: `np.object` is a deprecated alias for the builtin `object`. To silence this warning, use `object` by itself. Doing this will not modify any behavior and is safe. Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations
      if data.dtype == np.object:
    W0523 21:37:33.806679 663 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 10.1
    W0523 21:37:33.811025 663 device_context.cc:465] device: 0, cuDNN Version: 7.6.
    loading annotations into memory...
    Done (t=0.01s)
    creating index...
    index created!
    [05/23 21:37:37] ppdet.utils.checkpoint INFO: Finish loading model weights: output/picodet_l_640_coco_lcnet/best_model.pdparams
    [05/23 21:37:39] ppdet.engine INFO: Eval iter: 0
    [05/23 21:38:10] ppdet.engine INFO: Eval iter: 100
    [05/23 21:38:32] ppdet.metrics.metrics INFO: The bbox result is saved to bbox.json.
    loading annotations into memory...
    Done (t=0.59s)
    creating index...
    index created!
    [05/23 21:38:32] ppdet.metrics.coco_utils INFO: Start evaluate...
    Loading and preparing results...
    DONE (t=0.49s)
    creating index...
    index created!
    Running per image evaluation...
    Evaluate annotation type *bbox*
    DONE (t=2.98s).
    Accumulating evaluation results...
    DONE (t=0.53s).
     Average Precision  (AP) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.809
     Average Precision  (AP) @[ IoU=0.50      | area=   all | maxDets=100 ] = 0.989
     Average Precision  (AP) @[ IoU=0.75      | area=   all | maxDets=100 ] = 0.961
     Average Precision  (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.769
     Average Precision  (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.861
     Average Precision  (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.934
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=  1 ] = 0.649
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets= 10 ] = 0.839
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.839
     Average Recall     (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.807
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.887
     Average Recall     (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.944
    [05/23 21:38:37] ppdet.engine INFO: Total sample number: 1382, averge FPS: 25.858773913913232

The model was trained on 9,680 images (70% of the dataset) and evaluated on the validation set of 1,382 images. The results are mAP(0.5)=98.9% and mAR(0.5:0.95)=83.9%.

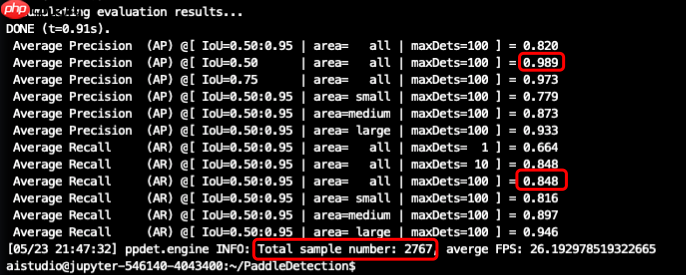

On the test set of 2,767 images, the results are mAP(0.5)=98.9% and mAR(0.5:0.95)=84.8%.

To evaluate on a different dataset, change the value of anno_path under EvalDataset in configs/datasets/coco_detection.yml, for example to coco_test.json.
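The number in parentheses after mAP is an IoU (intersection over union) threshold: at mAP(0.5), a predicted box counts as a match only if its IoU with a ground-truth box is at least 0.5. A minimal sketch of the IoU computation follows; the two boxes are hypothetical values chosen for illustration.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


ground_truth = (100, 100, 200, 200)  # hypothetical labelled box
prediction = (110, 110, 210, 210)    # hypothetical predicted box
score = iou(ground_truth, prediction)
print(f"IoU = {score:.3f}")             # IoU = 0.681
print("match at 0.50:", score >= 0.50)  # True
print("match at 0.75:", score >= 0.75)  # False
```

This is why AP at IoU=0.75 and AP averaged over 0.50:0.95 are lower than AP at 0.50 in the log above: the stricter thresholds reject boxes that are only roughly aligned.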

7. Model Prediction

Load the trained model, set the confidence threshold to 0.5, and run the following command to predict on images from the validation or test set:

    %env CUDA_VISIBLE_DEVICES=0
    # Parameters:
    #   -c: model configuration file
    #   --infer_img: image to test
    #   --output_dir: where results are written
    #   --draw_threshold: confidence threshold
    #   -o weights: trained model to load
    !python tools/infer.py -c configs/picodet/picodet_l_640_coco_lcnet.yml \
        --infer_img=/home/aistudio/dataset/JPEGImages/008839.jpg \
        --output_dir=infer_output/ \
        --draw_threshold=0.5 \
        -o weights=output/picodet_l_640_coco_lcnet/best_model.pdparams

Output:

    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/tensor/creation.py:130: DeprecationWarning: `np.object` is a deprecated alias for the builtin `object`. To silence this warning, use `object` by itself. Doing this will not modify any behavior and is safe. Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations
      if data.dtype == np.object:
    W0523 22:12:12.803400 3736 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 10.1
    W0523 22:12:12.807762 3736 device_context.cc:465] device: 0, cuDNN Version: 7.6.
    [05/23 22:12:14] ppdet.utils.checkpoint INFO: Finish loading model weights: output/picodet_l_640_coco_lcnet/best_model.pdparams
    loading annotations into memory...
    Done (t=0.01s)
    creating index...
    index created!
    100%|█████████████████████████████████████████████| 1/1 [00:00<00:00, 3.99it/s]
    [05/23 22:12:15] ppdet.engine INFO: Detection bbox results save in infer_output/008839.jpg

7.1 Prediction Results

8. Model Export

The model files saved during training include both the forward pass and backpropagation. Industrial deployment does not need backpropagation, so the model has to be exported to a format suited to deployment. Run the following command to export the model:

    %env CUDA_VISIBLE_DEVICES=0
    !python tools/export_model.py \
        -c configs/picodet/picodet_l_640_coco_lcnet.yml \
        -o weights=output/picodet_l_640_coco_lcnet/best_model.pdparams \
        --output_dir=inference_model

Output:

    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/tensor/creation.py:130: DeprecationWarning: `np.object` is a deprecated alias for the builtin `object`. To silence this warning, use `object` by itself. Doing this will not modify any behavior and is safe. Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations
      if data.dtype == np.object:
    [05/23 22:13:23] ppdet.utils.checkpoint INFO: Finish loading model weights: output/picodet_l_640_coco_lcnet/best_model.pdparams
    loading annotations into memory...
    Done (t=0.01s)
    creating index...
    index created!
    [05/23 22:13:23] ppdet.engine INFO: Export inference config file to inference_model/picodet_l_640_coco_lcnet/infer_cfg.yml
    W0523 22:13:27.917946 3852 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 10.1
    W0523 22:13:27.918013 3852 device_context.cc:465] device: 0, cuDNN Version: 7.6.
    [05/23 22:13:30] ppdet.engine INFO: Export model and saved in inference_model/picodet_l_640_coco_lcnet

The inference model is exported under the inference_model/ directory and consists of four files: model.pdmodel, model.pdiparams, model.pdiparams.info, and infer_cfg.yml. They hold, respectively, the network structure, the model weights, the weight names, and the model configuration (including the data preprocessing parameters).
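Before moving on to deployment, it can help to confirm that all four files are present. The snippet below is a small convenience sketch using only the standard library; the helper name `missing_export_files` is made up here, and the directory passed to it is the export path that appears in the log above.

```python
from pathlib import Path

# The four files the export step is expected to produce.
EXPECTED_FILES = (
    "model.pdmodel",         # network structure
    "model.pdiparams",       # model weights
    "model.pdiparams.info",  # weight names
    "infer_cfg.yml",         # model configuration, including preprocessing
)


def missing_export_files(model_dir):
    """Return the expected export files that are absent from model_dir."""
    model_dir = Path(model_dir)
    return [name for name in EXPECTED_FILES if not (model_dir / name).is_file()]


missing = missing_export_files("inference_model/picodet_l_640_coco_lcnet")
print("missing files:", missing or "none")
```

An empty result means the directory is complete; any names listed point to an export that did not finish.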

For more on model export, see the model export documentation.

9. Model Inference

Use the following command to run prediction with the exported inference model. For a detailed tutorial, see the Python inference deployment documentation:

    %env CUDA_VISIBLE_DEVICES=0
    # Parameters:
    #   --model_dir: path of the model exported above
    #   --image_file: image to test
    #   --image_dir: alternatively, a folder of images to test
    #   --device: runtime device, one of CPU/GPU/XPU; defaults to CPU
    #   --output_dir: root directory for visualization results; defaults to output/
    !python deploy/python/infer.py \
        --model_dir=./inference_model/picodet_l_640_coco_lcnet \
        --image_file=/home/aistudio/dataset/JPEGImages/008327.jpg \
        --device=GPU

Output:

    -----------  Running Arguments -----------
    action_file: None
    batch_size: 1
    camera_id: -1
    cpu_threads: 1
    device: GPU
    enable_mkldnn: False
    enable_mkldnn_bfloat16: False
    image_dir: None
    image_file: /home/aistudio/dataset/JPEGImages/008327.jpg
    model_dir: ./inference_model/picodet_l_640_coco_lcnet
    output_dir: output
    random_pad: False
    reid_batch_size: 50
    reid_model_dir: None
    run_benchmark: False
    run_mode: paddle
    save_images: False
    save_mot_txt_per_img: False
    save_mot_txts: False
    scaled: False
    threshold: 0.5
    tracker_config: None
    trt_calib_mode: False
    trt_max_shape: 1280
    trt_min_shape: 1
    trt_opt_shape: 640
    use_dark: True
    use_gpu: False
    video_file: None
    window_size: 50
    ------------------------------------------
    -----------  Model Configuration -----------
    Model Arch: GFL
    Transform Order:
    --transform op: Resize
    --transform op: NormalizeImage
    --transform op: Permute
    --------------------------------------------
    class_id:0, confidence:0.9360, left_top:[716.70,76.82],right_bottom:[761.55,118.69]
    class_id:0, confidence:0.9119, left_top:[649.82,103.49],right_bottom:[678.15,139.18]
    class_id:0, confidence:0.6749, left_top:[572.66,129.77],right_bottom:[588.33,145.87]
    save result to: output/008327.jpg
    Test iter 0
    ------------------ Inference Time Info ----------------------
    total_time(ms): 1701.7, img_num: 1
    average latency time(ms): 1701.70, QPS: 0.587648
    preprocess_time(ms): 1690.10, inference_time(ms): 11.50, postprocess_time(ms): 0.10
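The detection lines in this output follow a regular pattern, so they are easy to post-process. Below is a small sketch that parses them with a regular expression and keeps only the detections above a confidence threshold. The parser is an illustration written for this article, not part of PaddleDetection, and it assumes the line format stays exactly as printed above.

```python
import re

# Matches lines such as:
# class_id:0, confidence:0.9360, left_top:[716.70,76.82],right_bottom:[761.55,118.69]
LINE_PATTERN = re.compile(
    r"class_id:(\d+), confidence:([\d.]+), "
    r"left_top:\[([\d.]+),([\d.]+)\],right_bottom:\[([\d.]+),([\d.]+)\]"
)


def parse_detections(log_text, threshold=0.5):
    """Extract detections from the log text, keeping those with confidence >= threshold."""
    detections = []
    for match in LINE_PATTERN.finditer(log_text):
        confidence = float(match.group(2))
        if confidence >= threshold:
            detections.append({
                "class_id": int(match.group(1)),
                "confidence": confidence,
                # (x_min, y_min, x_max, y_max) in pixels
                "box": tuple(float(v) for v in match.groups()[2:]),
            })
    return detections


LOG = """class_id:0, confidence:0.9360, left_top:[716.70,76.82],right_bottom:[761.55,118.69]
class_id:0, confidence:0.9119, left_top:[649.82,103.49],right_bottom:[678.15,139.18]
class_id:0, confidence:0.6749, left_top:[572.66,129.77],right_bottom:[588.33,145.87]"""

for det in parse_detections(LOG, threshold=0.9):
    print(det)
```

With the default threshold of 0.5 all three boxes are kept; raising it to 0.9 drops the third, lower-confidence box.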

10. Quantization-Aware Training

10.1 Modifying the Quantization Configuration File

Quantizing the model can make it run faster.
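As a rough intuition for what 8-bit quantization does to a weight tensor, the sketch below applies symmetric abs-max quantization to a short list of numbers. It is a simplified, pure-Python illustration, not PaddleSlim's implementation: the function names and sample weights are hypothetical, and real quantization-aware training simulates this rounding inside the network while the weights are still being trained.

```python
def quantize_abs_max(weights, bits=8):
    """Symmetric abs-max quantization: map floats to signed integers."""
    q_max = 2 ** (bits - 1) - 1  # 127 for 8 bits
    abs_max = max(abs(w) for w in weights)
    scale = abs_max / q_max if abs_max > 0 else 1.0
    # Round to the nearest integer level and clamp to the representable range.
    quantized = [max(-q_max, min(q_max, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map integer levels back to approximate float values."""
    return [q * scale for q in quantized]


weights = [0.50, -1.27, 0.03, 0.90]  # hypothetical weights of one channel
q, scale = quantize_abs_max(weights)
restored = dequantize(q, scale)
print("int8 values:", q)
print("max error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

The rounding error per weight is at most half of `scale`, which is one way to see why the quantized model loses only a little accuracy. Computing the scale per output channel rather than per tensor, as the `channel_wise_abs_max` setting name suggests, keeps that error small for channels whose weights are small.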

We run quantization-aware training starting from the model trained earlier. The steps are:

1) Modify the quantization configuration file 'configs/slim/quant/picodet_s_quant.yml', setting the pretrain_weights parameter to the model trained before quantization.

2) If training raises an error, reduce batch_size in picodet_s_quant.yml and adjust the learning rate to match the new batch_size.

The modified file:

    #pretrain_weights: https://paddledet.bj.bcebos.com/models/picodet_s_320_coco.pdparams
    pretrain_weights: /home/aistudio/PaddleDetection/output/picodet_l_640_coco_lcnet/best_model.pdparams
    slim: QAT

    QAT:
      quant_config: {
        'activation_preprocess_type': 'PACT',
        'weight_quantize_type': 'channel_wise_abs_max',
        'activation_quantize_type': 'moving_average_abs_max',
        'weight_bits': 8,
        'activation_bits': 8,
        'dtype': 'int8',
        'window_size': 10000,
        'moving_rate': 0.9,
        'quantizable_layer_type': ['Conv2D', 'Linear']}
      print_model: False

    epoch: 50
    LearningRate:
      base_lr: 0.000025 #0.001
      schedulers:
      - !PiecewiseDecay
        gamma: 0.1
        milestones:
        - 30
        - 40
      - !LinearWarmup
        start_factor: 0.
        steps: 100

    TrainReader:
      batch_size: 12 #96

10.2 Start Quantization-Aware Training

    # Online quantization training on a single GPU
    # Parameters:
    #   -c: model configuration file
    #   --slim_config: quantization configuration file
    %env CUDA_VISIBLE_DEVICES=0
    !python tools/train.py \
        -c configs/picodet/picodet_l_640_coco_lcnet.yml \
        --slim_config configs/slim/quant/picodet_s_quant.yml --eval

    # After an interruption, resume quantization training with -r
    # !python tools/train.py \
    #     -c configs/picodet/picodet_l_640_coco_lcnet.yml \
    #     --slim_config configs/slim/quant/picodet_s_quant.yml \
    #     -r output/picodet_s_quant/34.pdparams --eval

10.3 Model Evaluation and Comparison

    # After quantization training, evaluate as before, adding --slim_config
    %env CUDA_VISIBLE_DEVICES=0
    !python tools/eval.py -c configs/picodet/picodet_l_640_coco_lcnet.yml \
        --slim_config configs/slim/quant/picodet_s_quant.yml \
        -o weights=output/picodet_s_quant/best_model

Output:

    /opt/conda/envs/python35-paddle120-env/lib/python3.7/site-packages/paddle/tensor/creation.py:130: DeprecationWarning: `np.object` is a deprecated alias for the builtin `object`. To silence this warning, use `object` by itself. Doing this will not modify any behavior and is safe. Deprecated in NumPy 1.20; for more details and guidance: https://numpy.org/devdocs/release/1.20.0-notes.html#deprecations
      if data.dtype == np.object:
    W0523 22:59:59.601784 7220 device_context.cc:447] Please NOTE: device: 0, GPU Compute Capability: 7.0, Driver API Version: 11.2, Runtime API Version: 10.1
    W0523 22:59:59.606324 7220 device_context.cc:465] device: 0, cuDNN Version: 7.6.
    [05-23 23:00:01 MainThread @logger.py:242] Argv: tools/eval.py -c configs/picodet/picodet_l_640_coco_lcnet.yml --slim_config configs/slim/quant/picodet_s_quant.yml -o weights=output/picodet_s_quant/best_model
    [05-23 23:00:01 MainThread @utils.py:79] WRN paddlepaddle version: 2.2.2. The dynamic graph version of PARL is under development, not fully tested and supported
    [05/23 23:00:04] ppdet.utils.checkpoint INFO: Finish loading model weights: output/picodet_s_quant/best_model.pdparams
    loading annotations into memory...
    Done (t=0.01s)
    creating index...
    index created!
    [05/23 23:00:09] ppdet.engine INFO: Eval iter: 0
    [05/23 23:00:41] ppdet.engine INFO: Eval iter: 100
    [05/23 23:01:06] ppdet.metrics.metrics INFO: The bbox result is saved to bbox.json.
    loading annotations into memory...
    Done (t=0.01s)
    creating index...
    index created!
    [05/23 23:01:06] ppdet.metrics.coco_utils INFO: Start evaluate...
    Loading and preparing results...
    DONE (t=0.66s)
    creating index...
    index created!
    Running per image evaluation...
    Evaluate annotation type *bbox*
    DONE (t=3.38s).
    Accumulating evaluation results...
    DONE (t=0.64s).
     Average Precision  (AP) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.773
     Average Precision  (AP) @[ IoU=0.50      | area=   all | maxDets=100 ] = 0.984
     Average Precision  (AP) @[ IoU=0.75      | area=   all | maxDets=100 ] = 0.935
     Average Precision  (AP) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.732
     Average Precision  (AP) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.821
     Average Precision  (AP) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.920
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=  1 ] = 0.624
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets= 10 ] = 0.805
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=   all | maxDets=100 ] = 0.805
     Average Recall     (AR) @[ IoU=0.50:0.95 | area= small | maxDets=100 ] = 0.772
     Average Recall     (AR) @[ IoU=0.50:0.95 | area=medium | maxDets=100 ] = 0.852
     Average Recall     (AR) @[ IoU=0.50:0.95 | area= large | maxDets=100 ] = 0.932
    [05/23 23:01:10] ppdet.engine INFO: Total sample number: 1382, averge FPS: 23.959119777169736
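To put the accuracy loss in numbers, the snippet below compares the area=all metrics from the two validation logs in this article (the original model in section 6 and the quantized model above). The values are copied from those logs; the helper name `metric_drops` is made up for this example.

```python
# area=all metrics on the 1,382-image validation set, copied from the eval logs.
baseline = {"mAP(0.5:0.95)": 0.809, "mAP(0.5)": 0.989, "mAP(0.75)": 0.961, "mAR(0.5:0.95)": 0.839}
quantized = {"mAP(0.5:0.95)": 0.773, "mAP(0.5)": 0.984, "mAP(0.75)": 0.935, "mAR(0.5:0.95)": 0.805}


def metric_drops(before, after):
    """Absolute drop of each metric, rounded to three decimals."""
    return {name: round(before[name] - after[name], 3) for name in before}


for name, drop in metric_drops(baseline, quantized).items():
    print(f"{name}: {baseline[name]:.3f} -> {quantized[name]:.3f} (drop {drop:.3f})")
```

The drop at IoU=0.5 is only 0.005, while the stricter metrics lose between 0.026 and 0.036, which suggests that quantization mostly affects how tightly the boxes are localized rather than whether signs are found.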

Results of the trained quantized model at different epochs, with area=all:

10.4 Prediction with the Quantized Model

    %env CUDA_VISIBLE_DEVICES=0
    # Predict with the quantized model
    !python tools/infer.py -c configs/picodet/picodet_l_640_coco_lcnet.yml \
        --slim_config configs/slim/quant/picodet_s_quant.yml \
        --infer_img=/home/aistudio/dataset/JPEGImages/008839.jpg \
        --output_dir=slim_infer_output \
        --draw_threshold=0.5 \
        -o weights=output/picodet_s_quant/best_model.pdparams

Display the result image:

    import cv2
    import matplotlib.pyplot as plt

    # Load the image written by the prediction step
    image = cv2.imread('slim_infer_output/008839.jpg')
    plt.figure(figsize=(7, 7))
    # OpenCV loads images as BGR; convert to RGB for matplotlib
    plt.imshow(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))
    plt.show()

10.5 Exporting the Quantized Model

    # Export the model
    %env CUDA_VISIBLE_DEVICES=0
    !python tools/export_model.py \
        -c configs/picodet/picodet_l_640_coco_lcnet.yml \
        --slim_config configs/slim/quant/picodet_s_quant.yml \
        -o weights=output/picodet_s_quant/best_model.pdparams \
        --output_dir=inference_model

    # Convert the inference model configuration to JSON
    #!python deploy/lite/convert_yml_to_json.py inference_model/picodet_s_quant/infer_cfg.yml

List the exported files:

    # Exported quantized model
    !ls -lh inference_model/picodet_s_quant/

Output:

    total 29M
    -rw-r--r-- 1 aistudio aistudio 1.1K May 23 22:43 infer_cfg.json
    -rw-r--r-- 1 aistudio aistudio  509 May 23 23:02 infer_cfg.yml
    -rw-r--r-- 1 aistudio aistudio  23M May 23 23:02 model.pdiparams
    -rw-r--r-- 1 aistudio aistudio 193K May 23 23:02 model.pdiparams.info
    -rw-r--r-- 1 aistudio aistudio 6.1M May 23 23:02 model.pdmodel

10.6 Inference with the Quantized Model

    %env CUDA_VISIBLE_DEVICES=0
    # Inference with the quantized model
    !python deploy/python/infer.py \
        --model_dir=./inference_model/picodet_s_quant \
        --image_file=/home/aistudio/dataset/JPEGImages/008327.jpg \
        --device=GPU

Output:

    -----------  Running Arguments -----------
    action_file: None
    batch_size: 1
    camera_id: -1
    cpu_threads: 1
    device: GPU
    enable_mkldnn: False
    enable_mkldnn_bfloat16: False
    image_dir: None
    image_file: /home/aistudio/dataset/JPEGImages/008327.jpg
    model_dir: ./inference_model/picodet_s_quant
    output_dir: output
    random_pad: False
    reid_batch_size: 50
    reid_model_dir: None
    run_benchmark: False
    run_mode: paddle
    save_images: False
    save_mot_txt_per_img: False
    save_mot_txts: False
    scaled: False
    threshold: 0.5
    tracker_config: None
    trt_calib_mode: False
    trt_max_shape: 1280
    trt_min_shape: 1
    trt_opt_shape: 640
    use_dark: True
    use_gpu: False
    video_file: None
    window_size: 50
    ------------------------------------------
    -----------  Model Configuration -----------
    Model Arch: GFL
    Transform Order:
    --transform op: Resize
    --transform op: NormalizeImage
    --transform op: Permute
    --------------------------------------------
    class_id:0, confidence:0.9245, left_top:[716.23,76.58],right_bottom:[762.66,119.49]
    class_id:0, confidence:0.9099, left_top:[648.96,104.52],right_bottom:[678.67,139.30]
    class_id:0, confidence:0.6850, left_top:[572.70,129.55],right_bottom:[589.45,146.79]
    save result to: output/008327.jpg
    Test iter 0
    ------------------ Inference Time Info ----------------------
    total_time(ms): 1740.3, img_num: 1
    average latency time(ms): 1740.30, QPS: 0.574614
    preprocess_time(ms): 1724.70, inference_time(ms): 15.50, postprocess_time(ms): 0.10
